On a daily basis, you will typically follow the instructions and guidelines in this section to operate the BluVector System. You will need to first properly install and configure the system. See article: System Installation and article: System Configuration for more information on installing and configuring.

Most of your interaction with the system is likely to be through the ATD GUI. However, there are situations where you may prefer to carry out certain system operations using the command-line interface. All users may take advantage of SSH access.

Warning:

Following the instructions and guidelines in this section is key to the effective operation of the BluVector system. Operators are solely responsible for proper operation of this system.

The sections below provide more information on operating the system:

Section: Viewing and Managing Notifications

Section: Managing Dashboards

Section: Viewing Event Information

Section: Using the Threat Vector Workflow

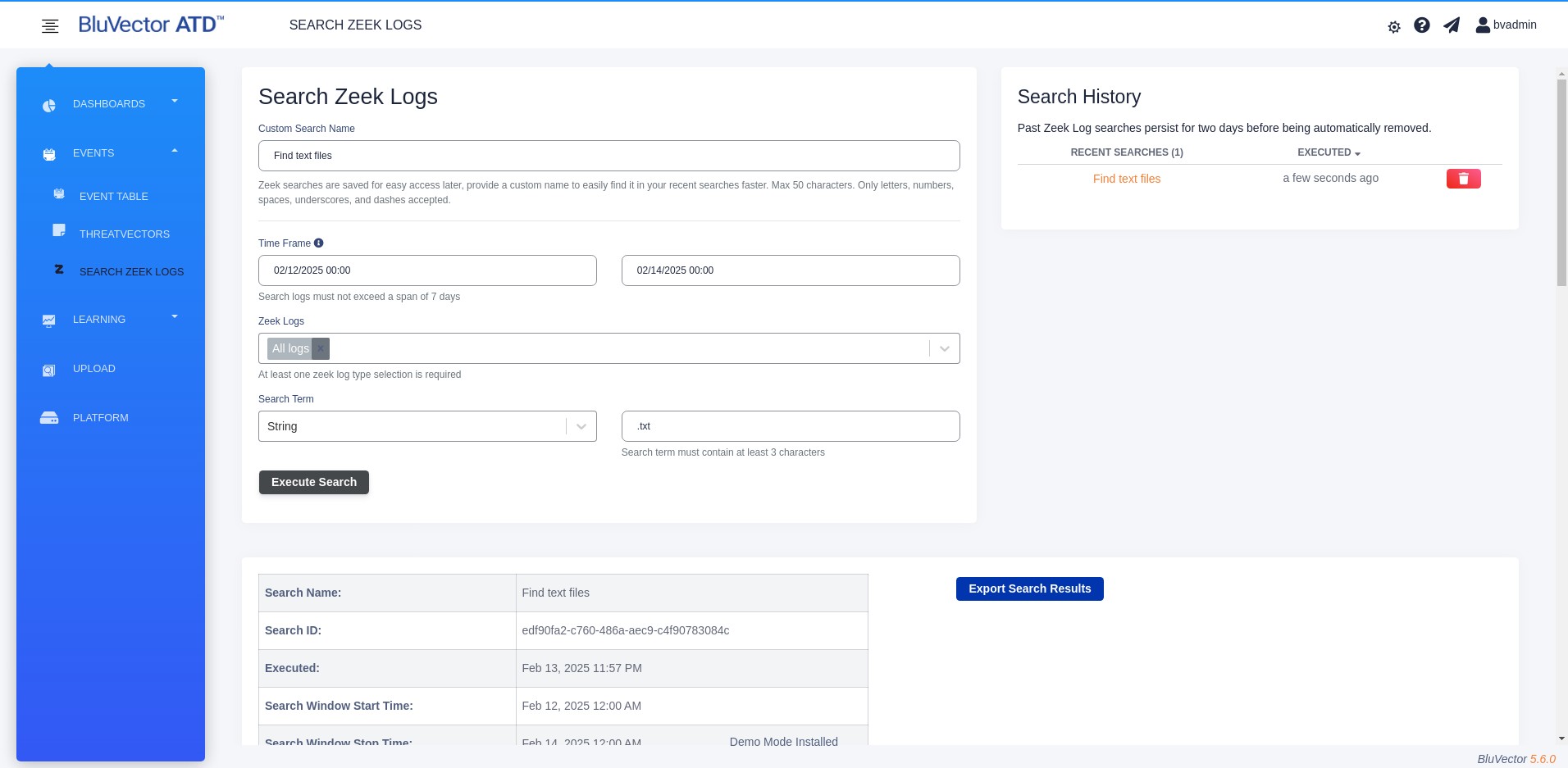

Section: Searching Zeek Logs

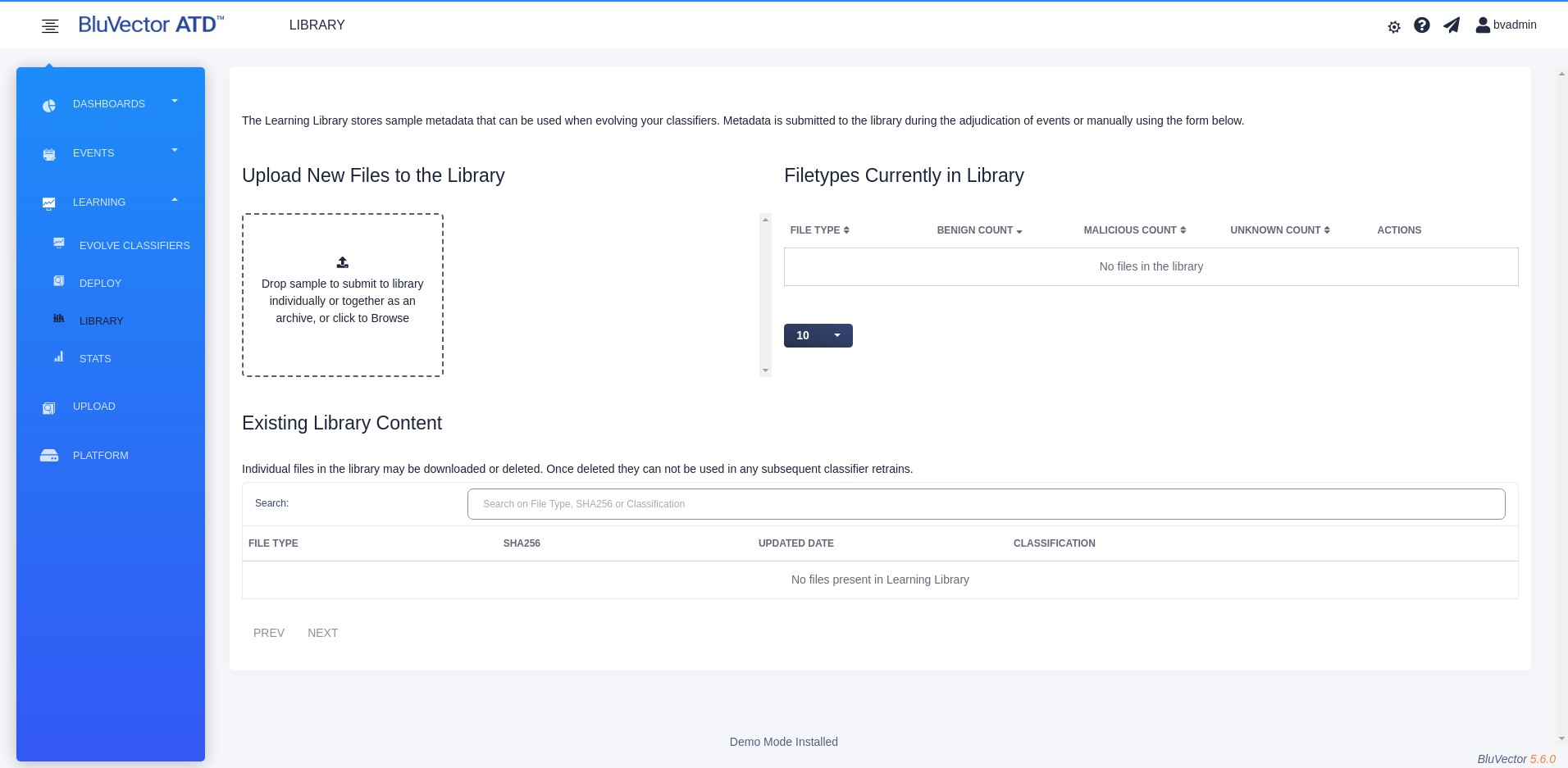

Section: Evolving Machine Learning Engine Classifiers through On-Premises Learning

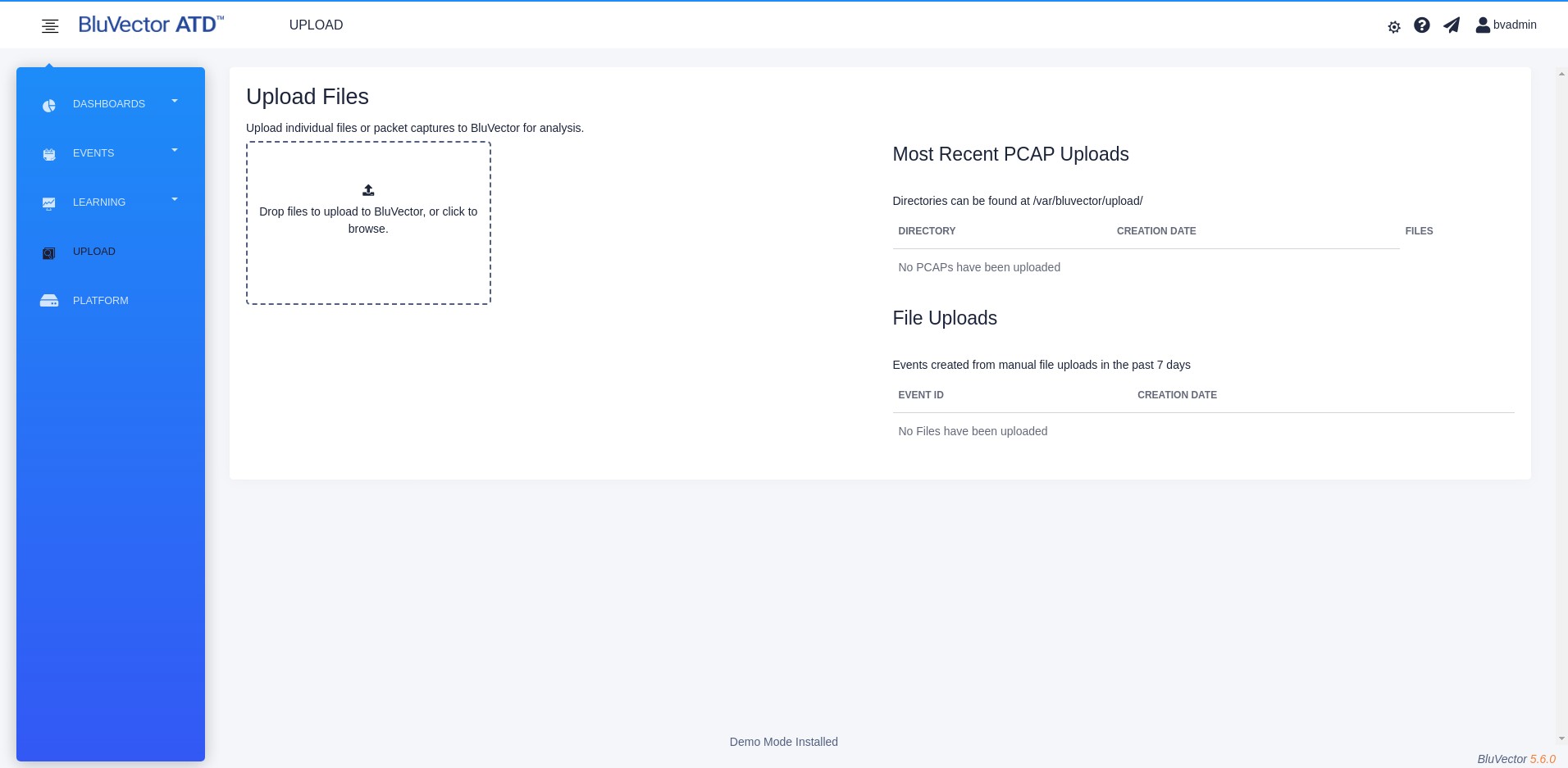

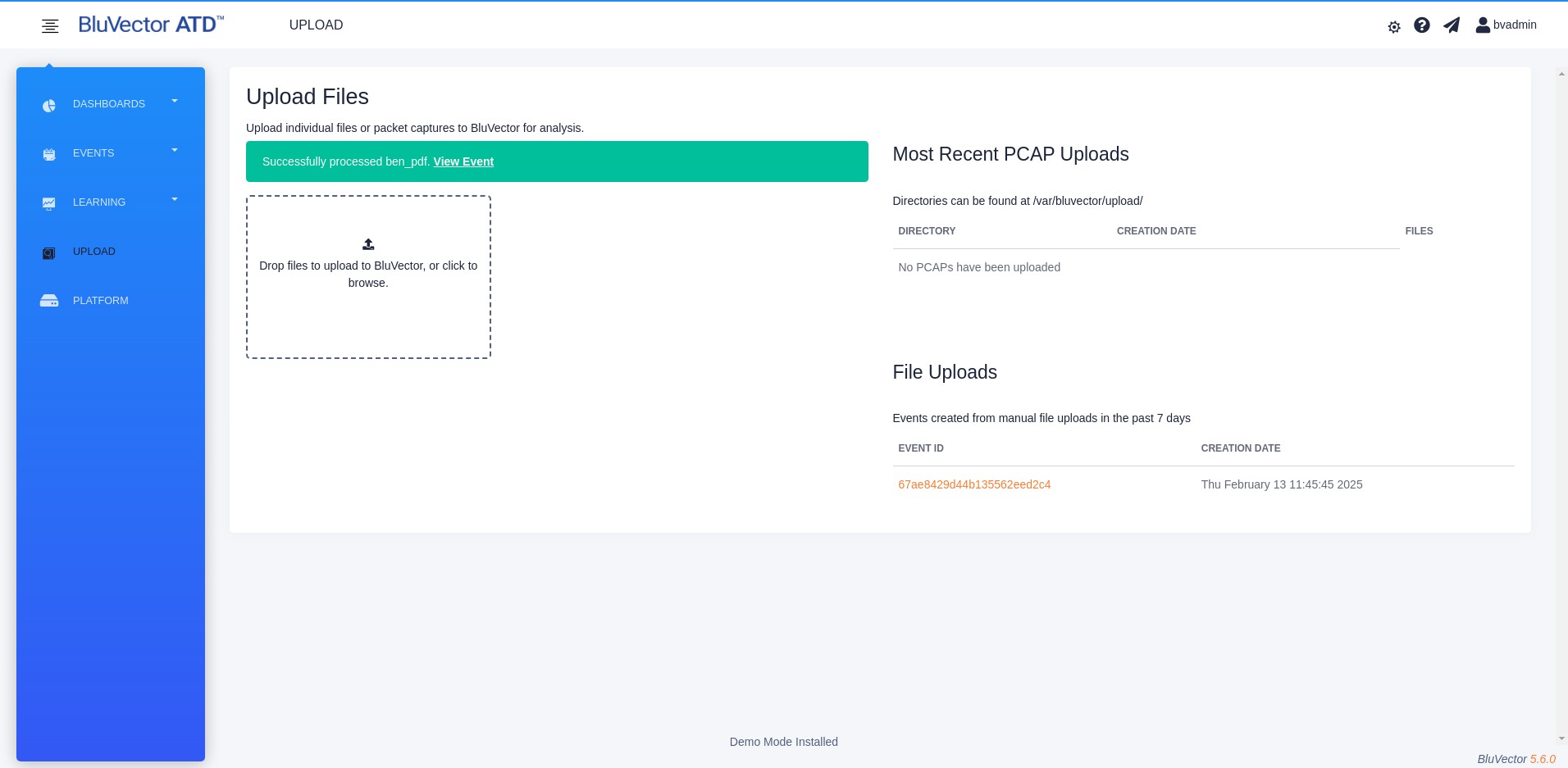

Section: Uploading Files

Section: Using Outputs

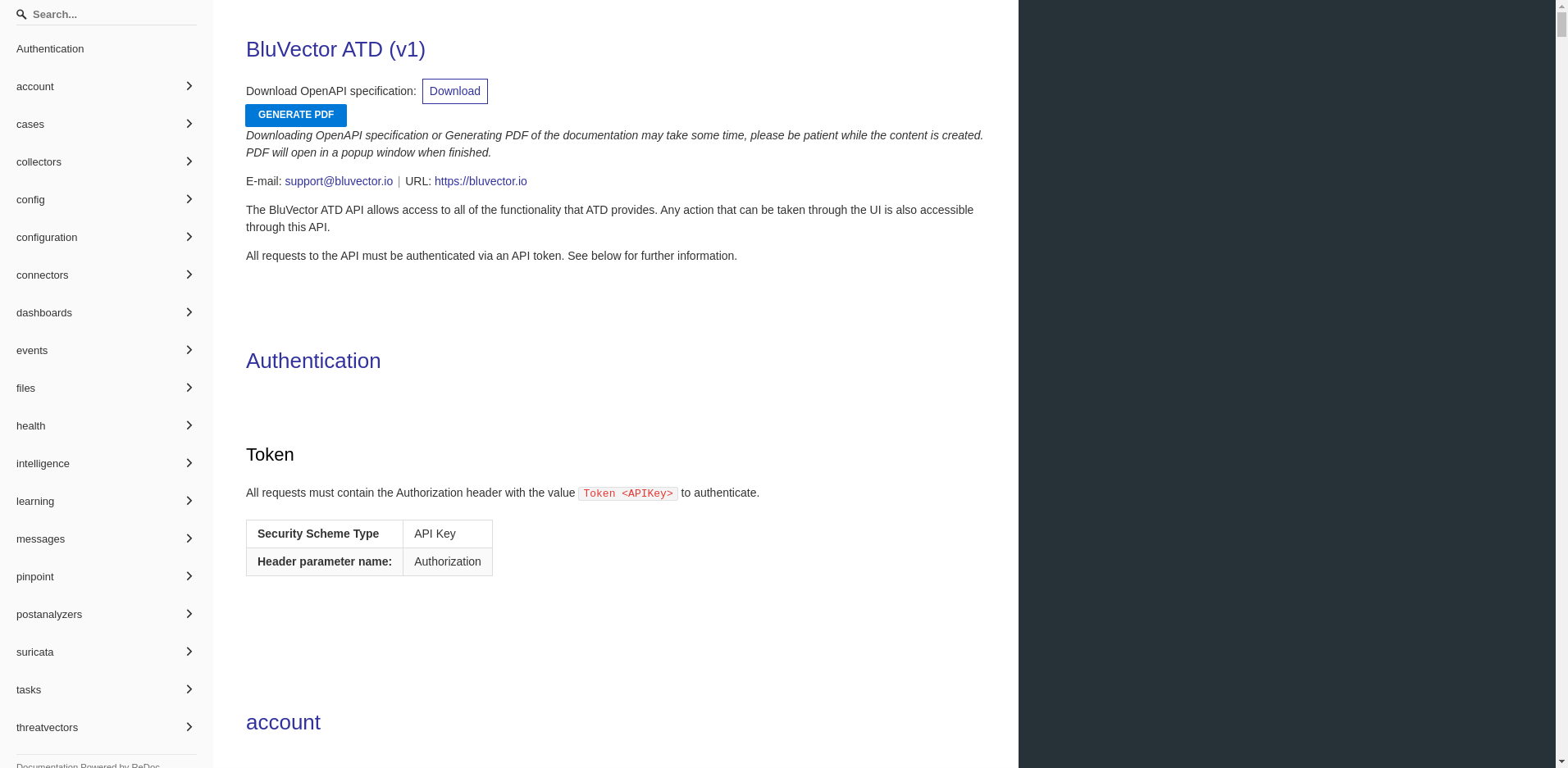

Section: Using the REST API

Section: Configuring SNMP Traps

Section: Updating License Files

Viewing and Managing Notifications

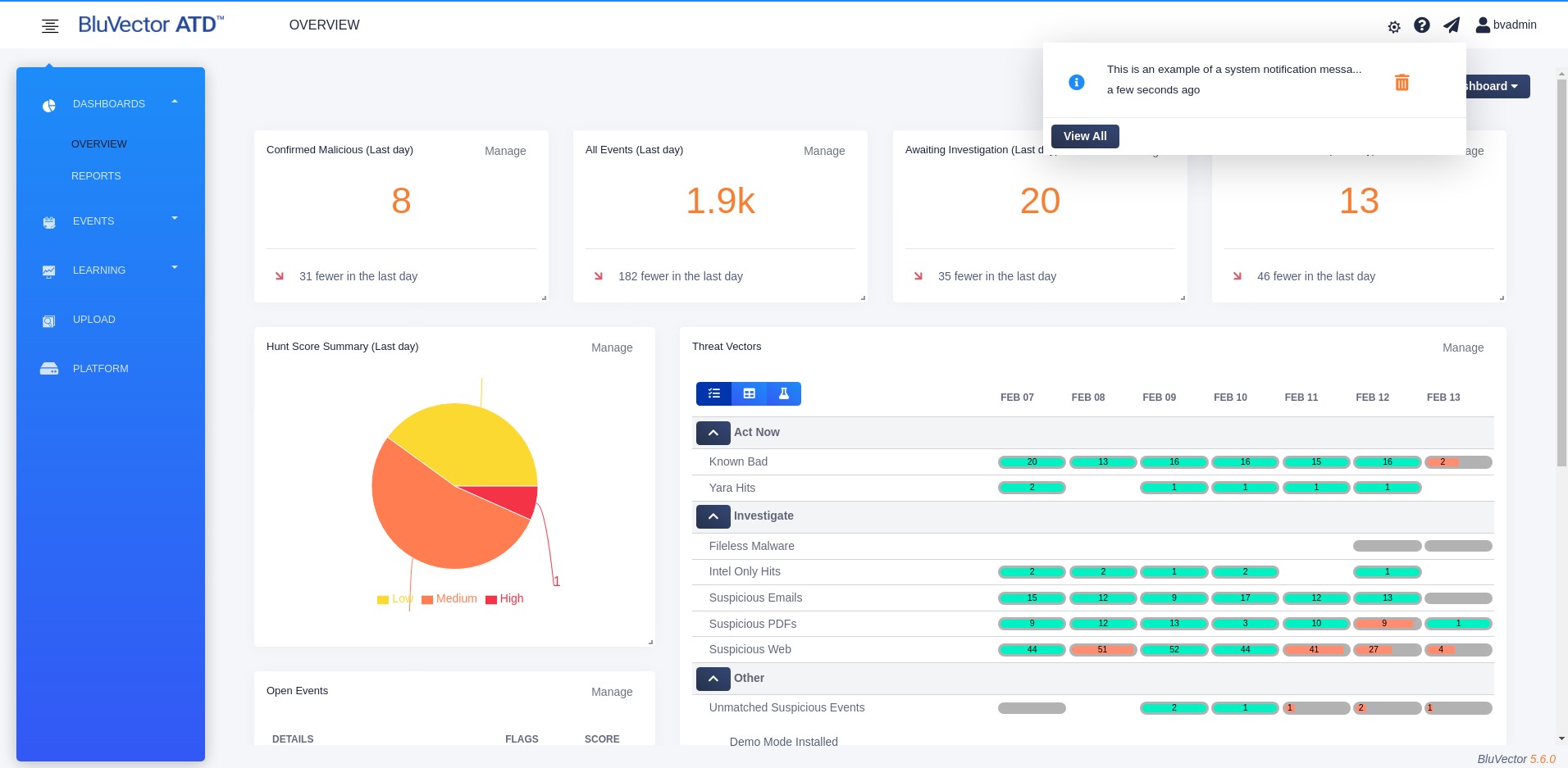

When there are system health alerts relevant to the logged in user, you can view and manage the notifications. The alert icon at the top of the screen will show a color dot indicator when there are messages to read.

To view the notifications, select the alert icon. A dropdown appears, showing the most recent messages (see Figure: Dropdown Displaying Most Recent Notifications).

From the dropdown, you can:

Select the trash can icon to remove a single message.

Fig. 9: Dropdown Displaying Most Recent Notifications

Select Dismiss All to remove all of the messages.

Select See All to bring up a screen showing all of the messages. The Notifications Screen appears (see Figure: Notifications Screen).

Managing Dashboards

When you log in to BluVector, a dashboard appears. The dashboard provides you with useful summaries of the events created by the system and interaction with those events. There are two default dashboards:

Overview Dashboard

Reports Dashboard

The dashboards provide an overview of analytic processing and a set of exportable reports. You can highly customize the dashboard and report system. You may also create additional custom dashboards, as well as configure which dashboard to show at login.

The following sections describe how you can manage the dashboards:

Section: Customizing Dashboards

Section: Using the Overview Dashboard

Section: Using the Reports Dashboard

Customizing Dashboards

The BluVector dashboards contain a set of configurable widgets. Each widget displays a certain type of information and can be independently moved and resized. The location and size of a widget are automatically saved after each change. You may want to create a new dashboard to customize the widgets in a certain configuration. The following procedures describe how to add and remove dashboards. To manage the widgets, see Section: Customizing Dashboard Widgets.

Procedure: Add a Dashboard

Follow these steps to add a new dashboard:

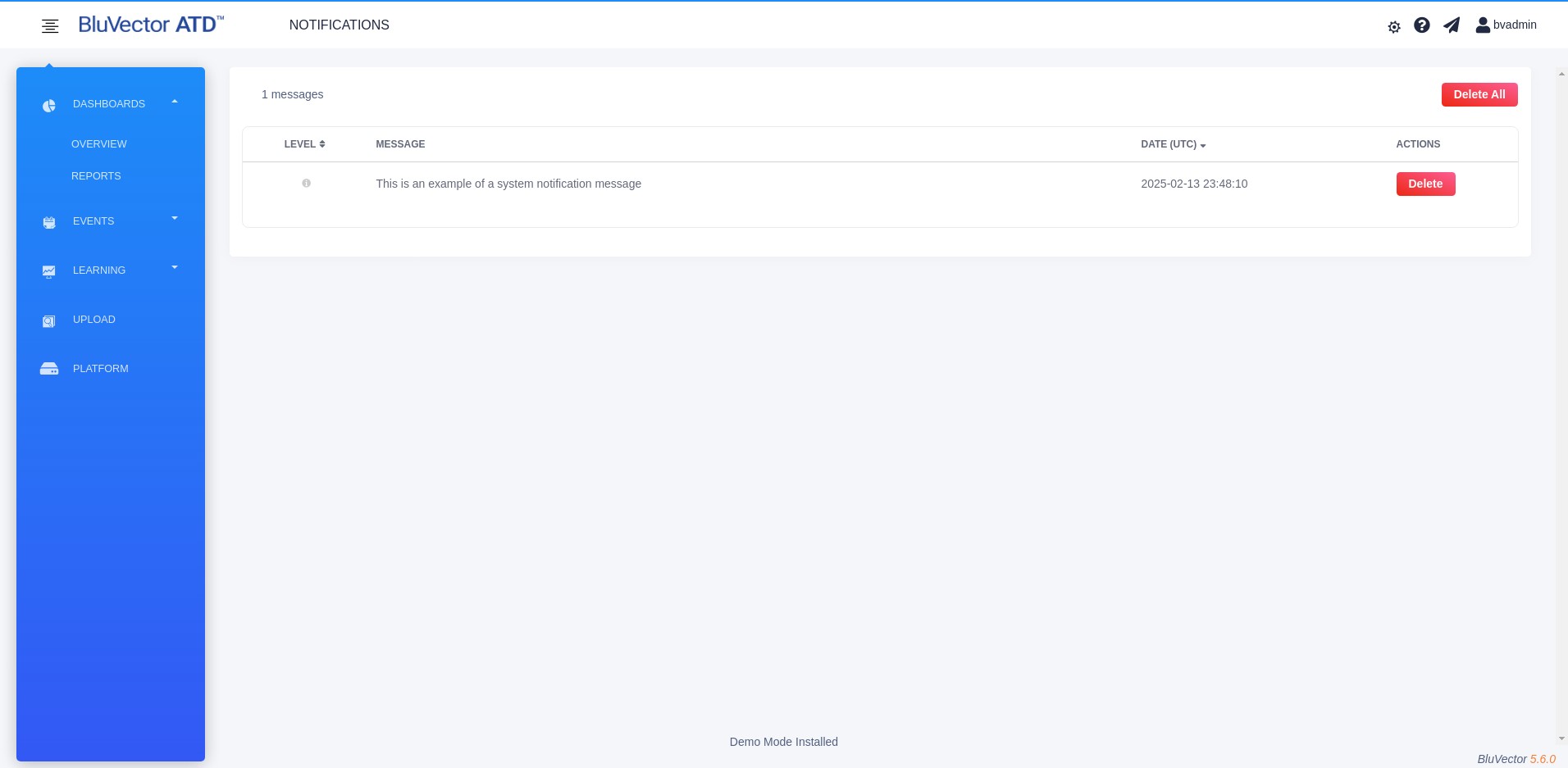

Fig. 10: Notifications Screen

The Notifications Screen displays a table with the following information:

Message

Date of the message

ACTIONS column

* To remove a single message, select Delete in the ‘ACTIONS’ column. To remove all of the messages, select Delete All at the top of the screen.

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

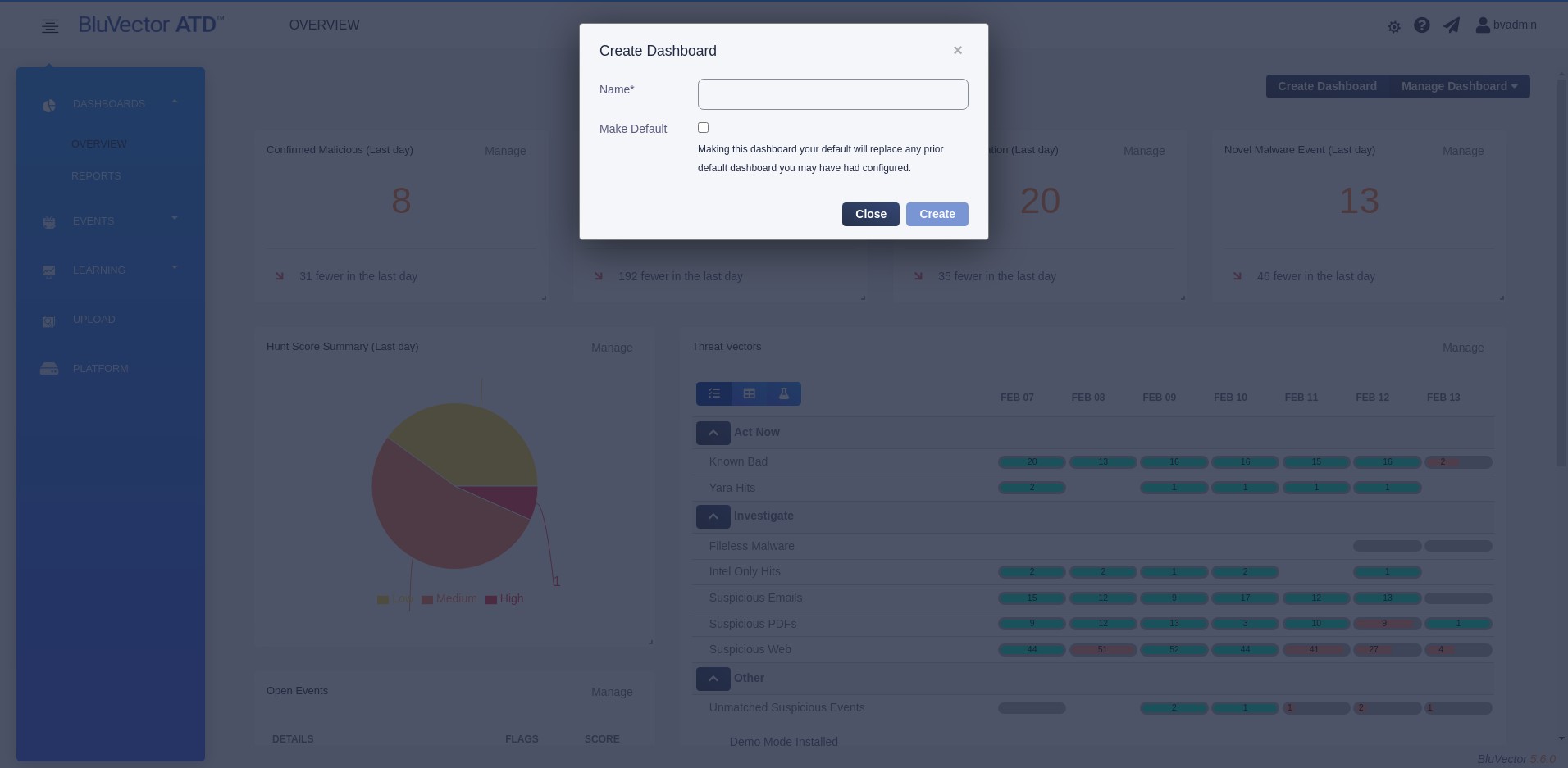

Select Create Dashboard. The “Create Dashboard” Screen appears (see Figure: Dashboard Create Screen)

Fig. 11: Dashboard Create Screen

Enter a Name for the dashboard.

If you would like it to be the default dashboard that is opened on system login, select Make default.

Select Create.

Procedure: Remove a Dashboard

Follow these steps to remove a dashboard:

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

Select DASHBOARDS from the menu on the left side of the screen.

Select the dashboard that you wish to delete.

Select Manage Dashboard.

Select Delete Dashboard from the menu that appears.

Select Delete.

Customizing Dashboard Widgets

There are many different types of widgets available for use in the BluVector dashboards. Most widgets are based on common use cases or popular reports. The Basic Widget and the Event Field Frequency Widget are the most customizable, and they display many different metrics captured by the system. Some widget types can export related data as a comma-separated value (.csv) file.

The following table describes the widget types. The procedures following the table describe how to add, move, resize, and remove widgets.

Widget Type | Description |

|---|---|

Activity Feed | Shows the most recent activity for certain actions within the system, along with the user who made the change. |

Average Time To Adjudication | Shows the mean time from when events are created to when either the user or system changes their status from Suspicious to Trusted or Malicious. |

Count Trend | Shows the count of all events, events awaiting adjudication, malicious events, or novel malware, along with a trend indicator showing whether the number has increased or decreased over the last configured time period. |

Data Flow | Shows a sankey diagram of event flow to the various analyzers in the system. |

Event Field Frequency | Provides a top/bottom N table for any event metafield. |

Event Status | Shows a breakdown of event status in the system. |

Geo Location | Displays a world graph of traffic for each country that is acting as either the source or destination. |

Hunt Score Summary | Shows a breakdown of event scores in the system, as either a pie chart or a bubble chart. |

Ingest Metric | Shows ingest-related statistics across collectors on an ATD Central Manager or on a standalone BluVector Collector. You can select whether to display Bits or Packets, as well as whether to show Received, Dropped, or both. The graph displays the requested data over time, which can be configured as the Last Day, Last Week, Last Month, or Last 60 Days. When the widget is displayed on an ATD Central Manager, the data will contain an entry for every BluVector Collector. |

Logged In Users | Shows the list of currently logged in users. |

Open Events | Shows a list of the latest suspicious events created by the system, sorted by their Hunt Score. |

Threat Vectors | Shows a breakdown of events in the configured Threat Vectors and progress bars, indicating how many have been adjudicated. |

User Adjudications | Shows a table of which users have adjudicated events. |

The following procedures describe how to add, move, resize, and remove widgets.

Procedure: Add, Move, or Resize a Widget

Follow these steps to add, move, or resize a widget:

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

Select DASHBOARDS in the menu on the left side of the screen.

Select the dashboard you wish to edit.

Select Manage Dashboard.

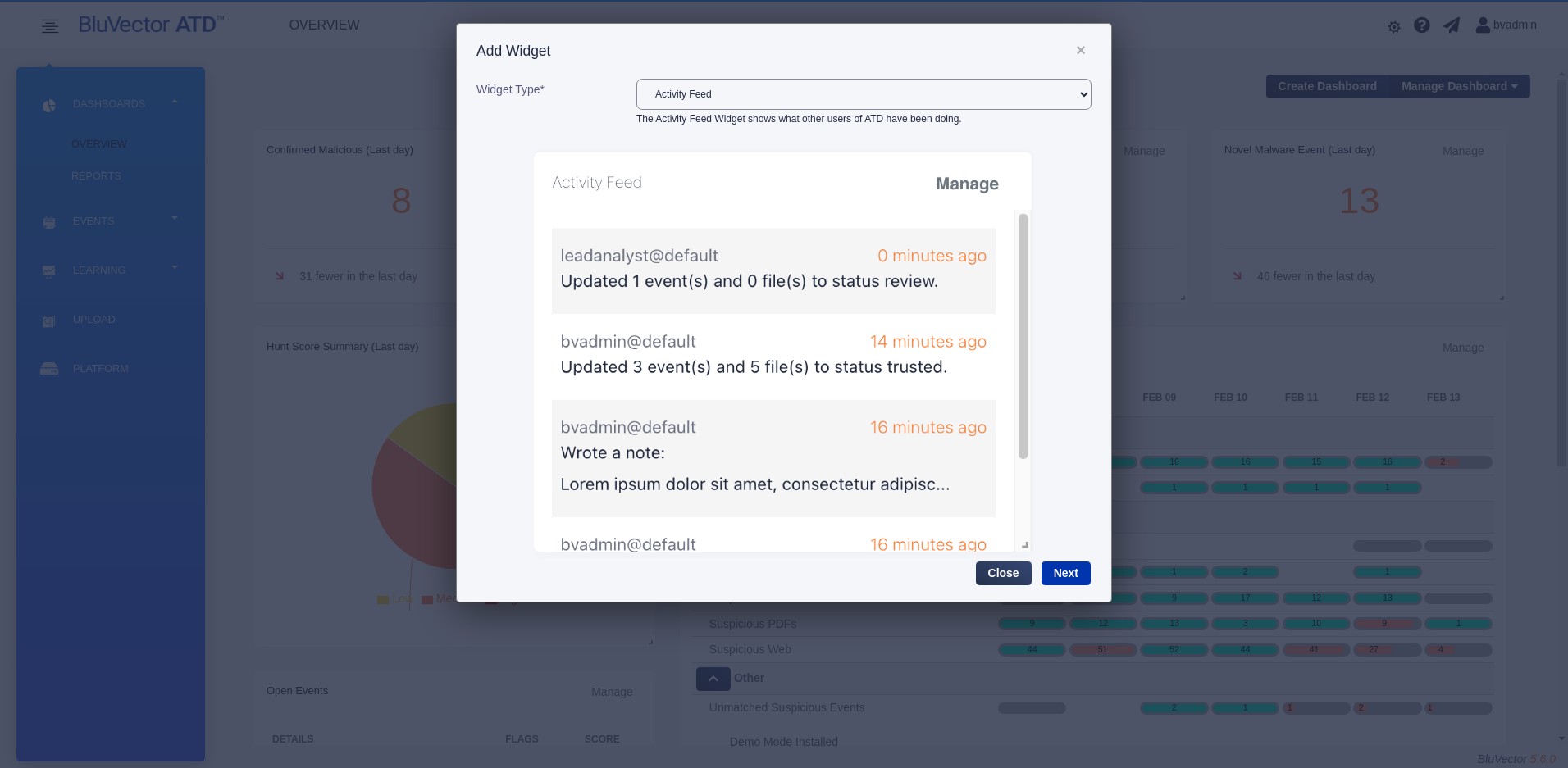

Select Add Widget from the menu that appears. The “Add Widget” Screen appears (see Figure: Add Widget Screen).

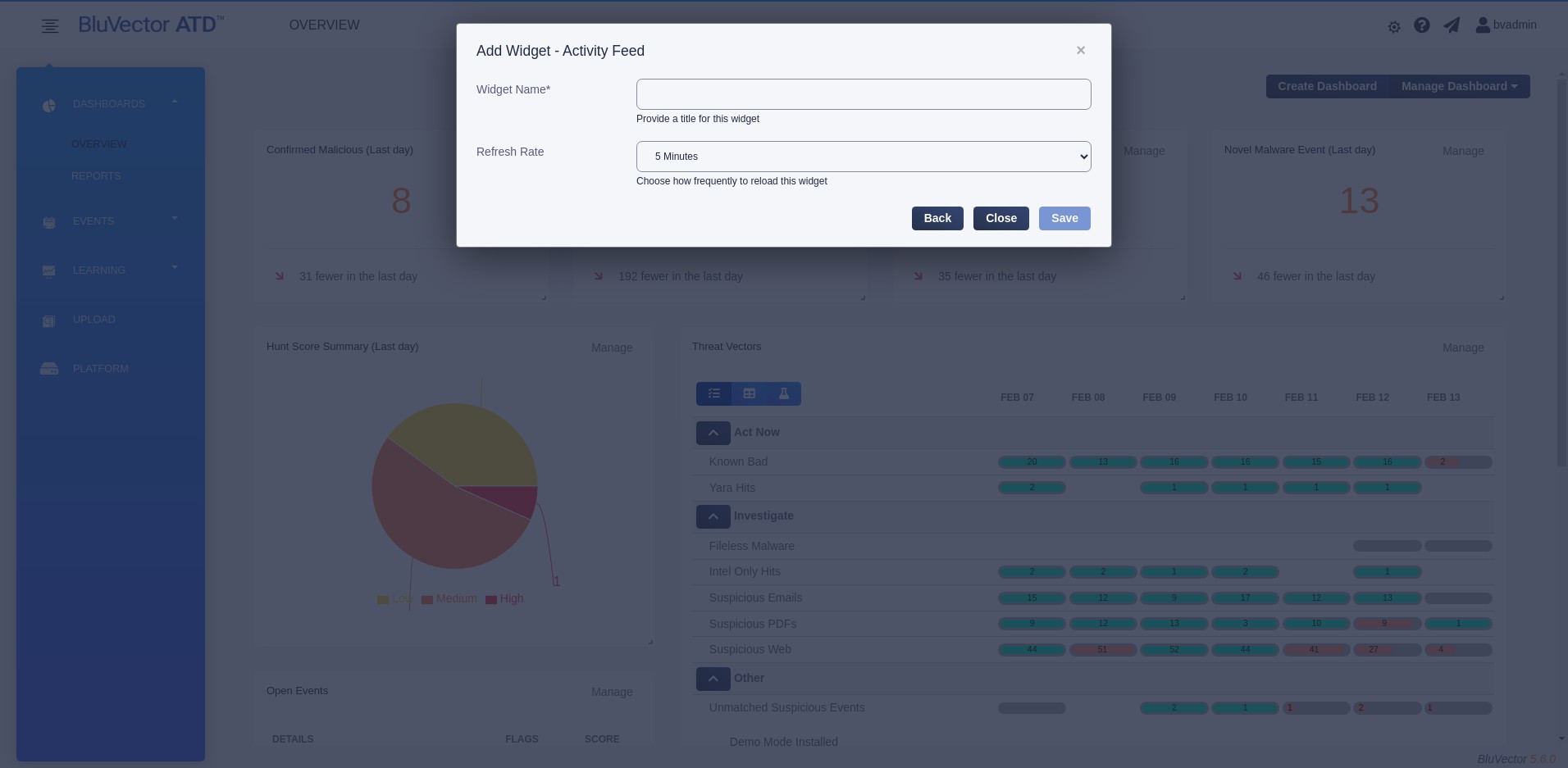

Fig. 12: Add Widget Screen

Select the desired widget type from the menu.

Select Next. The “Add Widget Details” Screen appears.

Configure the widget. Configuration settings vary, depending on the widget type. All required fields are marked with an asterisk (*). See Figure: Add Widget Details Screen for an example configuration screen.

Fig. 13: Add Widget Details Screen

Select Save.

To move the widget, select and hold within the widget title area and drag the widget to its new location.

To resize the widget, move the mouse to the widget’s lower right corner, then drag the corner to the new desired size.

Procedure: Remove a Widget

Follow these steps to remove a widget:

Log into the ATD GUI (see Section: Logging into the ATD GUI).

Select DASHBOARDS in the menu on the left side of the screen.

Select the dashboard you wish to change.

Select Manage on the widget that you wish to remove.

Select Delete from the menu that appears.

Using the Overview Dashboard

The Overview Dashboard is one of the default dashboards available in the ATD GUI. To bring up the Overview Dashboard:

Expand the DASHBOARDS section in the left-hand menu.

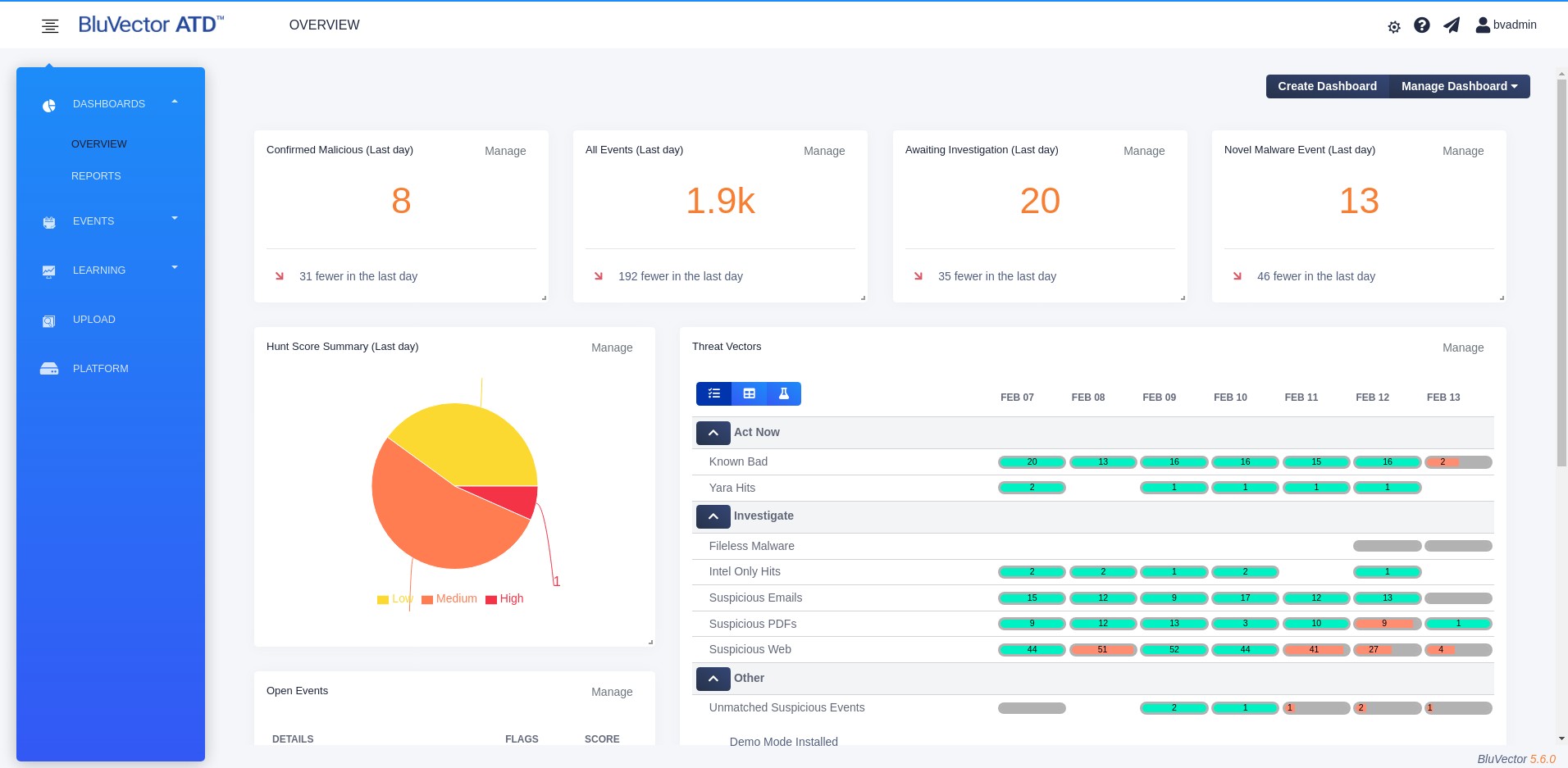

Select OVERVIEW. The Overview Dashboard appears (see Figure: Overview Dashboard).

Fig. 14: Overview Dashboard

The Overview Dashboard provides a summary of the BluVector Grid, as well as the analytic work performed on the local BluVector Sensor (or the entire BluVector Grid if viewed from an ATD Central Manager with event forwarding configured). The local BluVector Sensor is the one that you are logged into. The other BluVector Sensors in the BluVector Grid are considered remote BluVector Sensors. You may select a BluVector Sensor in the grid and drill down to receive additional health and status metrics.

The Overview Dashboard displays summary statistics about the number of events observed by the local BluVector Sensor, the number awaiting adjudication, the number determined by the user to be malicious, and the number of novel malware events discovered. Novel malware is malware or ransomware identified by BluVector that has no signature. If you are upgrading from a previous version of BluVector ATD, you can add the Novel Malware Events widget to your Overview Dashboard through the dashboard configuration capability (see Section: Customizing Dashboard Widgets).

Other sections of the Overview Dashboard include:

Threat Vectors - Indicates the size and percent completion of each Threat Vector over the last seven days.

Hunt Score Summary - Provides a visualization of events by Hunt Score bins. It displays the relative quantity of events in a variety of Hunt Score range bins over the last 24 hours. Select any segment of the pie chart to bring up an event table listing all events in that segment.

Open Events - Shows a breakdown of recent events by event status and the top open events, prioritized by Hunt Score. It provides easy access to the latest and highest priority events. Select any event summary to bring up the detailed view of that particular event.

You can access, evaluate, and adjudicate events through any of these methods in the ATD GUI:

Threat Vector View – The specialized Threat Vector View allows you to quickly focus on a subset of contextually-similar, flagged content that is broken out on a daily basis for the past seven days.

Event Viewer – The Event Viewer provides access to all events on the system, as well as methods for querying and drilling-down into the event database.

Directly viewing a single event – from either an external URL (such as one provided by BluVector to SIEMs) or from the top Open Events widget.

Viewing the Threat Vectors Section of the Overview Dashboard

The Threat Vectors section of the Overview Dashboard displays a table that summarizes the work performed and the work remaining to be done by an analyst. The work consists of suspicious records that are collected into categories called Threat Vectors. The information in this section matches the Threat Vectors Overview. See Section: Viewing the Threat Vectors Overview for a description of this information and see Section: Using the Threat Vector Workflow for an explanation of the full Threat Vector Workflow.

Using the Reports Dashboard

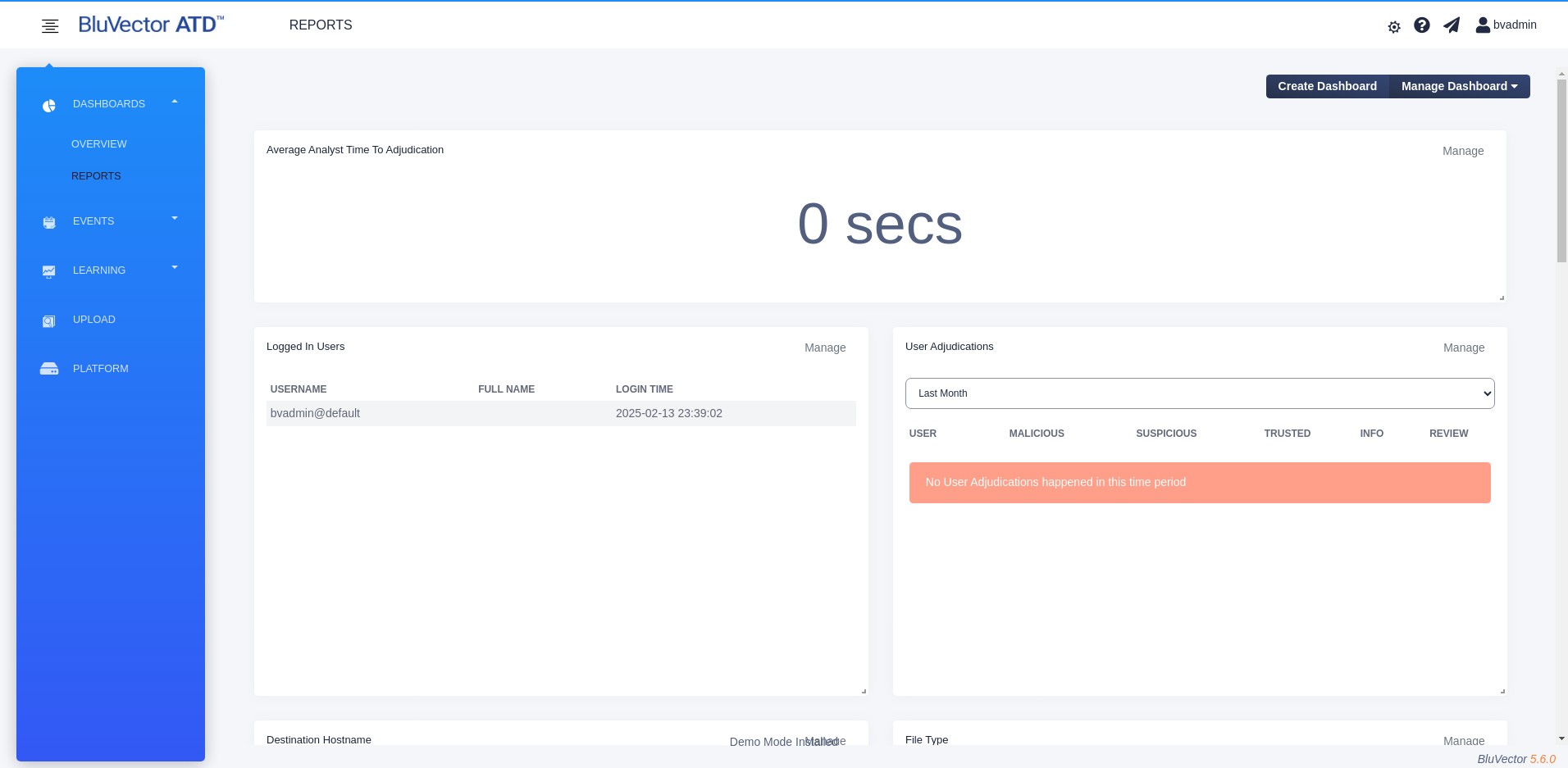

The Reports Dashboard is one of the default dashboards available in the ATD GUI. To bring up the Reports Dashboard:

Expand the DASHBOARDS section in the left-hand menu.

Select REPORTS. The “Reports Dashboard” appears (see Figure: Reports Dashboard).

The screen displays many preconfigured report widgets. You can export any of the tabular reports as a comma-separated values (.csv) file. Exported reports include the counts for all unique values for the metafield over the configured time range and event status. The default report widgets are:

Destination Hostname

File Type

Suspicious Network Events

Malware Engine

Source IP

Destination IP

Emails To

Emails From

User Adjudications

Logged in Users (shows currently logged in users)

Fig. 15: Reports Dashboard

In addition, the Average Analyst Investigation Time over the last seven-day window will be reported. Analyst investigation time is the duration of time between the generation of a suspicious event and an analyst adjudicating that event as either Malicious or Trusted. Analysts will be routinely moving events from Suspicious to either Trusted or Malicious status, indicating that they have adjudicated the potential threat. Events that have not been adjudicated are not included in the calculation.

Note:

Reports are calculated over the events generated by BluVector. The statistics represent the frequency of the traffic containing content that BluVector has analyzed. These reports are not representative of all traffic observed and processed by the BluVector Sensor.

Viewing Event Information

You can view, query, and traverse all events and telemetry metadata that is generated by the BluVector System. Many options are available from the Event Viewer (see Section: Using the Event Viewer). The Event Viewer supports searching for events in multiple ways.

In addition, the “Search Zeek Logs” Screen (see Section: Searching Zeek Logs) assists in searching the history of all network transactions observed by BluVector. You can use it to find evidence of traffic related to important indicators (such as filenames, hashes, IP addresses, and hostnames), even if no event is produced by BluVector.

The following sections explain more about using the Event Viewer to view event information:

Section: Using the Event Viewer

Section: Using the Event Details Screen

Section: Using Field Enhancers

Section: Understanding Data Schemas

Section: Downloading Files and Data

Section: Learning about BluVector Dynamic Malware Analysis in the Cloud

Using the Event Viewer

The Event Viewer displays events as soon as they are collected and analyzed.

To bring up the Event Viewer:

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

Select EVENTS from the menu on the left.

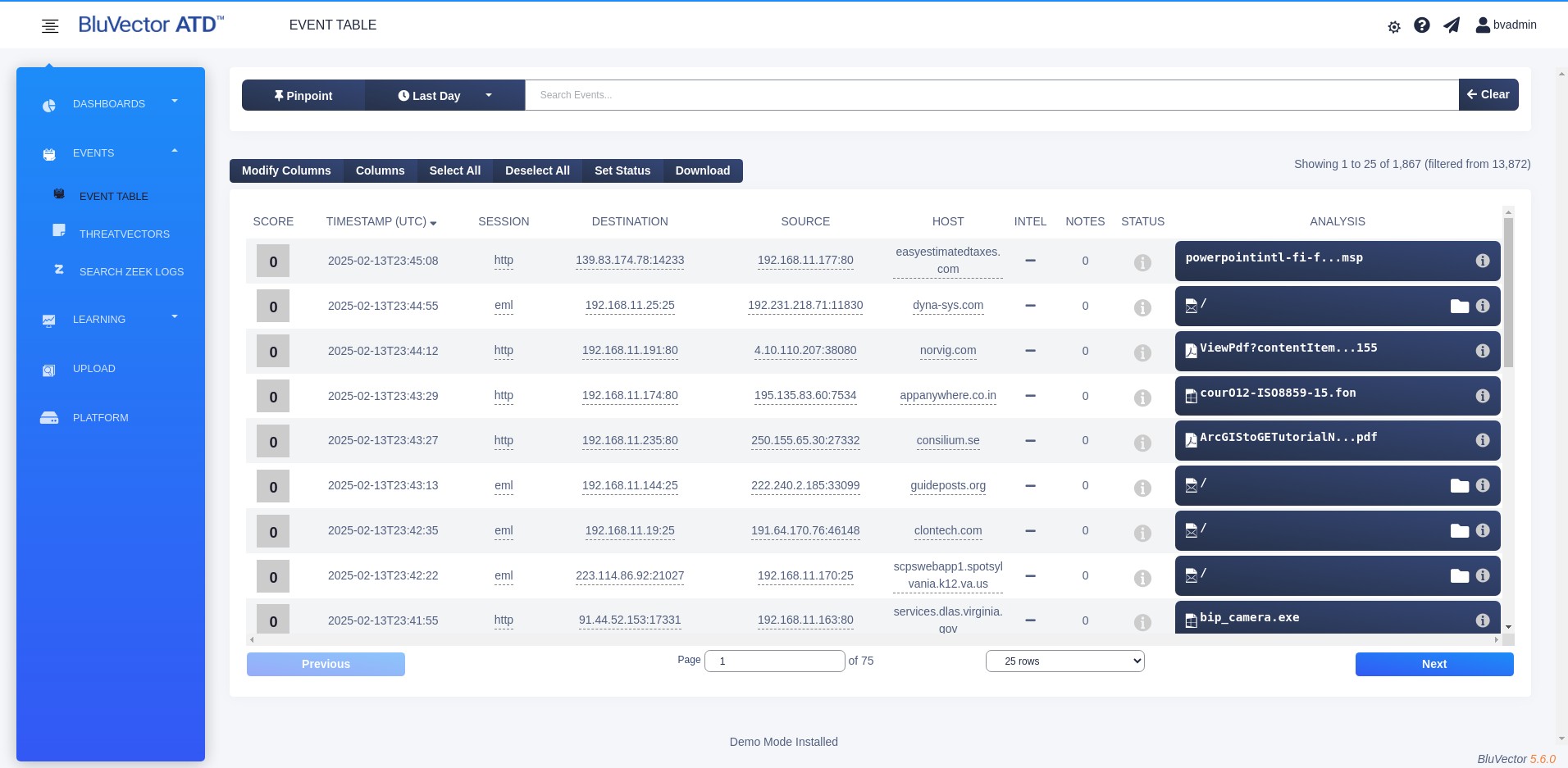

Expand the menu, then select EVENT TABLE in the submenu. The Event Viewer appears (see Figure: Event Viewer).

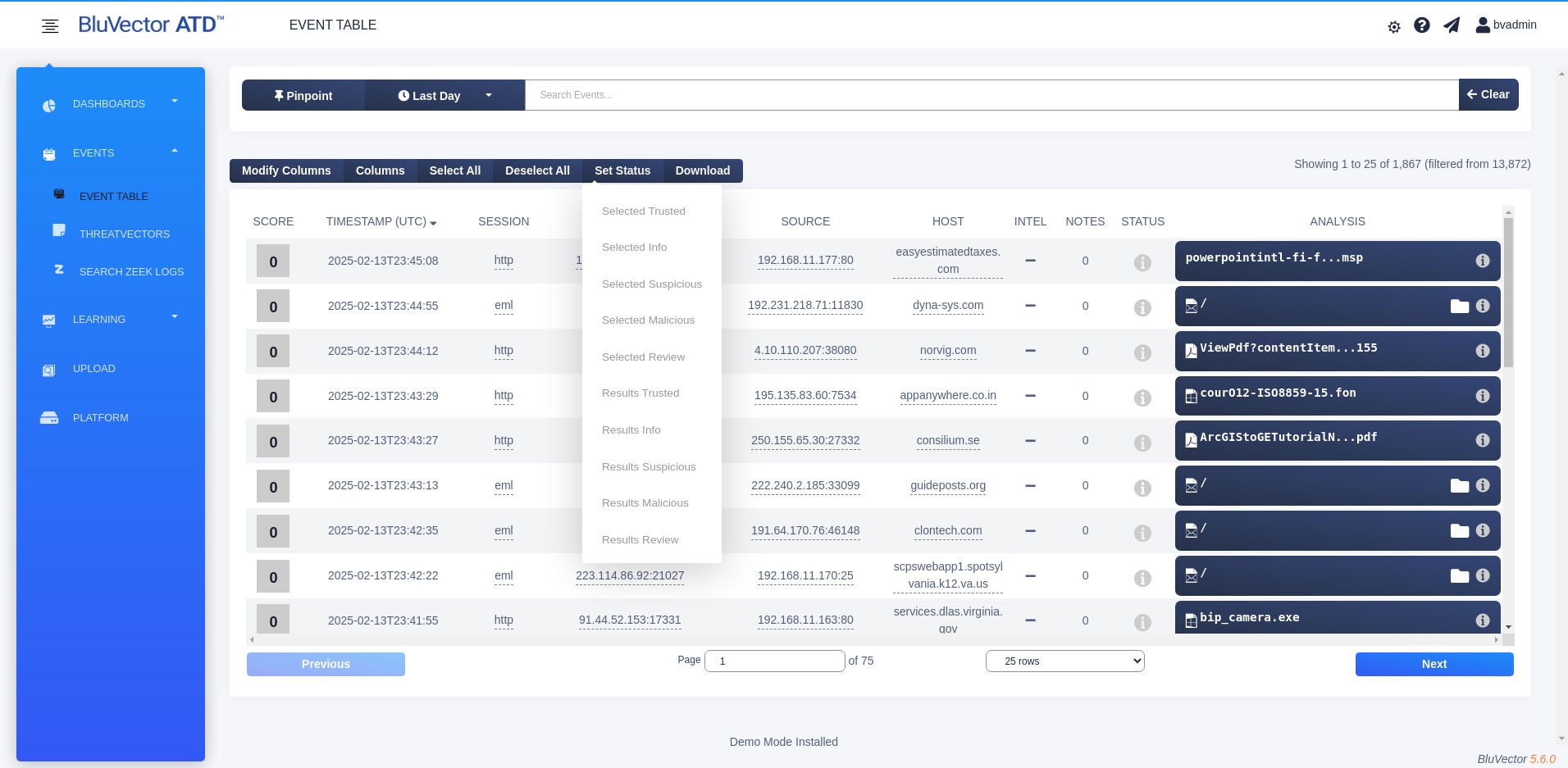

Fig. 16: Event Viewer

Each row in the Event Viewer represents a single event. If there are more events than will fit on the screen, select Previous and Next to navigate through the pages of data. Each row includes a summary of the most relevant event metadata, such as the event score, IP addresses, geolocation information, hostname, timestamp, and associated content.

Next to the destination and source IP addresses, a flag may appear for the country associated with the event. You can move the mouse cursor over a flag to see the name of the country.

You can select fields that are underlined for more options (see Section: Using Field Enhancers).

For an explanation of the schema that represents events and files, see Section: Understanding Data Schemas.

The default columns in the Event Viewer include:

SCORE – displays the event’s Hunt Score. Hunt Scores represent the priority an event should have for review. The scores do not represent estimates of event impact on the organization or sophistication of any potentially malicious content. All suspicious events should be reviewed. Scores range from 0 to a configurable maximum score (the default is 10), with higher scoring indicating events worthy of greater prioritization.

INTEL – displays a checkmark if an intelligence provider has flagged any of the metadata within an event or its associated files. You will need to view the event metadata or the file metadata to see which intelligence provider flagged which criteria.

NOTES – shows the number of user-created notes associated with that event.

STATUS – indicates the status of each event. The event status is the most important part of each event. It is indicated by an icon and is set by either the BluVector Sensor or a user. You can change the event status from this screen. The event status is different from a file status.

The event status can be one of the following:

Trusted (green check mark) – indicates that the event is trusted.

Info (gray circle containing a black “i”) – indicates that the event metadata is provided for information only, and you do not need to adjudicate this event.

Suspicious (orange exclamation mark) – indicates that the event is suspicious.

Malicious (red “X”) – indicates that the event is malicious.

Review (blue flag) – indicates that the event should be reviewed in more detail by an analyst.

By default, events are automatically marked as Suspicious when they are flagged by a BluVector analyzer (for example, if they are above the Machine Learning Engine malicious threshold, match a Yara rule hit, or match a ClamAV signature). You may expand the flagging criteria in the general configuration for analyzers (see Section: Configuring General Analyzer Settings for more information).

Events will be automatically marked as Info if the analysis results do not match any of the flagging criteria.

You may configure the system to automatically set event statuses based on user-defined criteria. For example, you might specify to trust any event from the hostname www.microsoft.com. You can accomplish this from the “Event Workflow Configuration” Screen. See Section: Configuring Event Rules for more details.

For additional information on changing the status of an event or file, see Section: Changing the Status of Events and Files.

ANALYSIS – displays an icon of the filetype, the analyzers that have flagged the content (if any), the name of the file, and a status symbol representing the status of the file. There is usually at least one file associated with each event. An event without associated files typically occurs when the intelligence correlation engine or Suricata matches a network stream that does not contain a file. If an event has more than one file associated with it, a folder icon will appear to the right of the filename.

To view more detailed metadata and analysis of all relevant event metadata, protocol headers, and associated content, select an item under the ANALYSIS column (see Section: Using the Event Details Screen for more information).

Filtering Events

You can filter the events displayed in the Event Viewer through these methods:

Search bar – you may enter a search in the box at the top of the screen to manually query event and file metadata. The search bar contains an autocomplete feature that is consistent with the event schema. You can also save interesting queries. See Section: Searching Events with Queries for more information.

PinPoint – this utility provides a flexible mechanism to identify and drill down on events. To access PinPoint, select Pinpoint in the menu at the top of the screen. The PinPoint sidebar then appears to the left of the events table. For more information about using PinPoint, see Section: Sorting with PinPoint.

Selecting underlined data – You can modify which events are displayed by selecting a field that has a dotted underline (see Section: Using Field Enhancers). A menu will appear with options for searching based on the data.

Customizing the Event Viewer Columns

You can customize some of the ways that the Event Viewer presents data, such as by selecting which columns of information you would like to view and the order in which they appear. You can also add custom columns.

The following procedures describe how to customize the Event Viewer columns.

Procedure: Temporarily Choose the Columns in the Event Viewer

Follow these steps to temporarily change which columns appear in the Event Viewer:

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

Select EVENTS in the menu on the left.

Select EVENT TABLE from the submenu. The Event Viewer appears (see Figure: Event Viewer).

Select Columns, which is located above the table of events. A menu of column options appears.

Select column names to enable or disable them.

To exit this editing mode, select anywhere outside of the menu.

The columns will be reset when you reload the screen.

Procedure: Make Persistent Customizations for the Event Viewer

Follow these steps to make persistent changes to the Event Viewer.

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

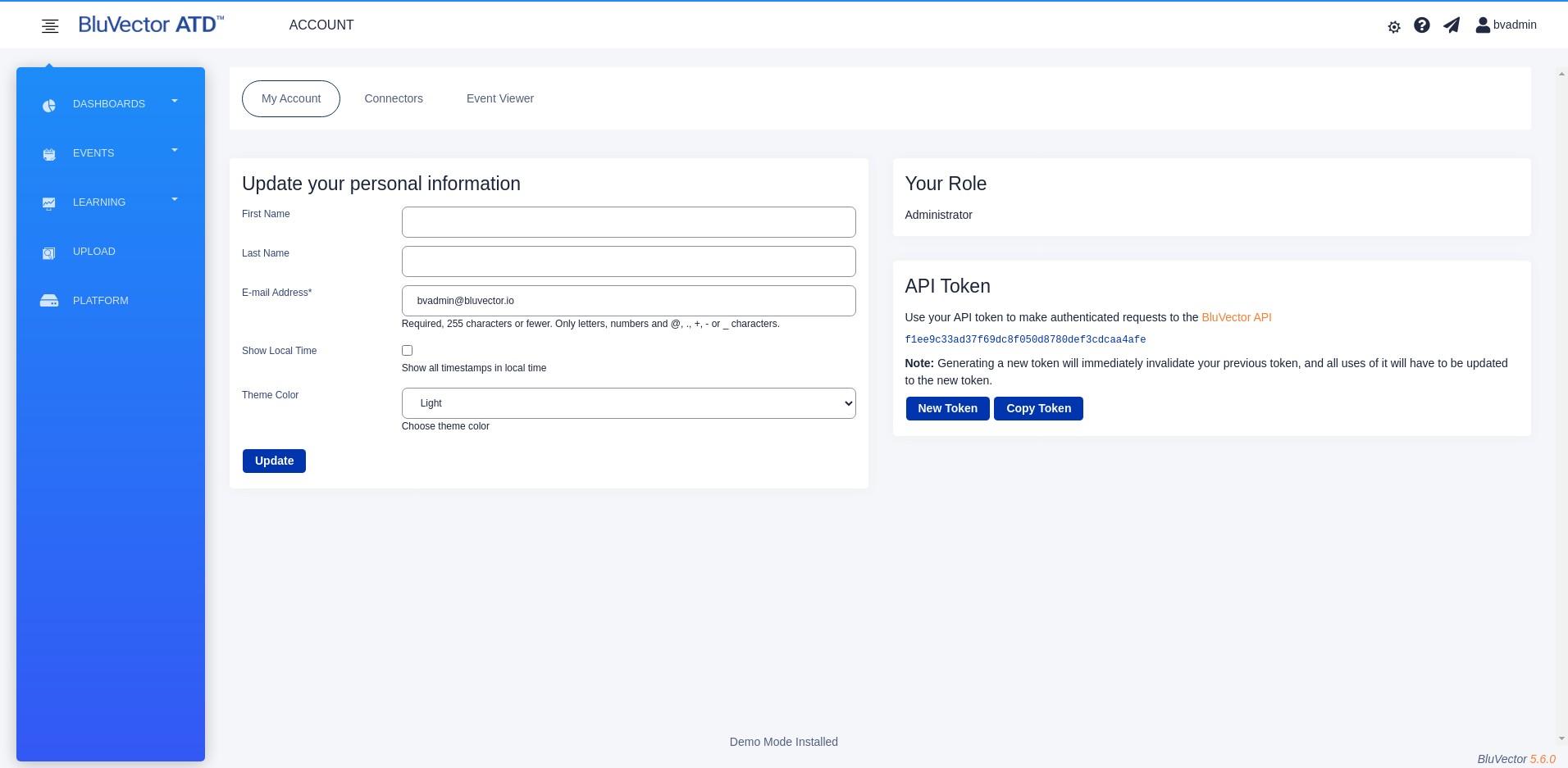

From the ATD GUI, select your user name in the upper right corner. A menu appears.

Select Account.

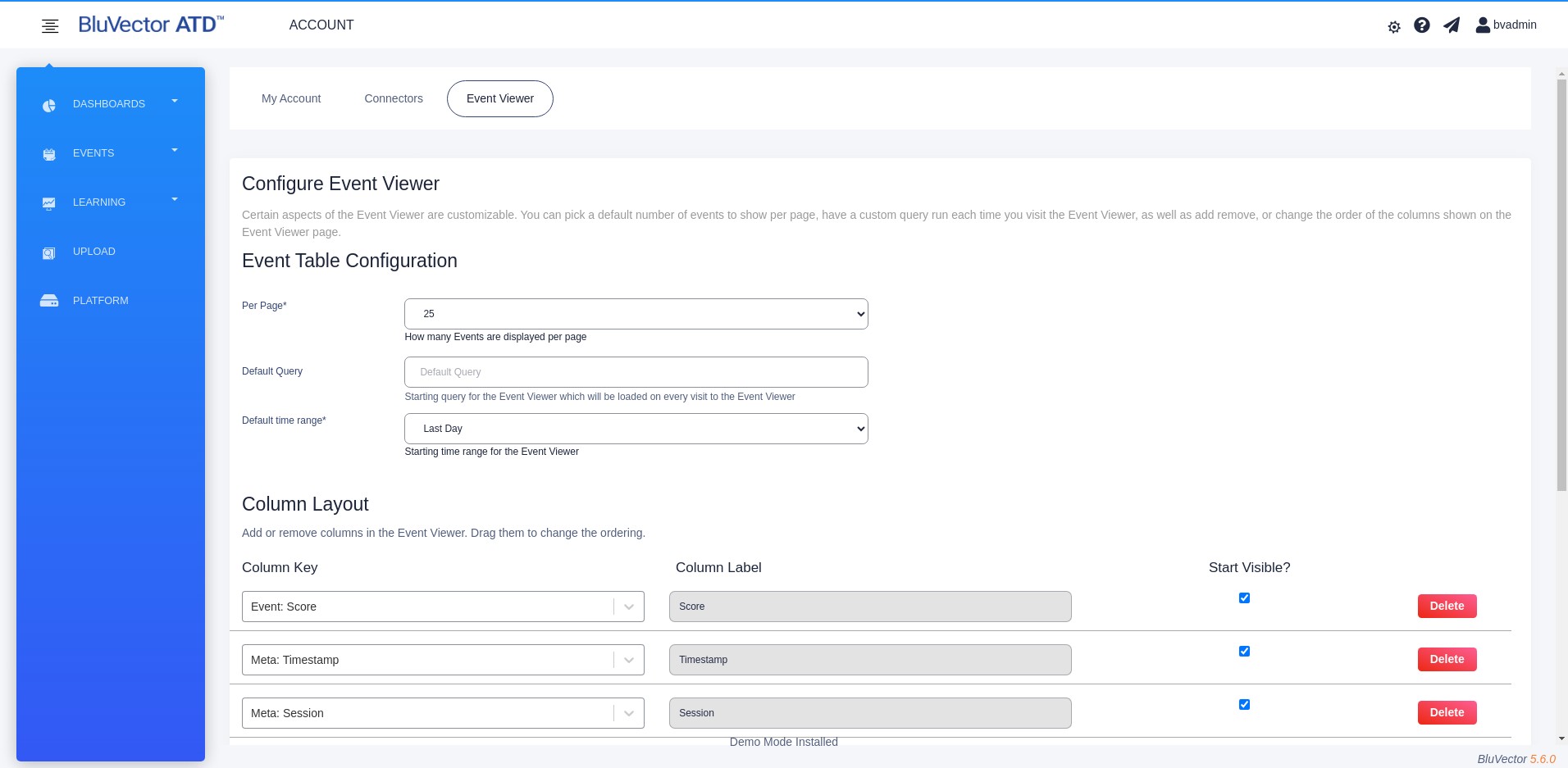

Select Event Viewer. The “Configure Event Viewer” Screen appears (see Figure: Configure Event Viewer Screen).

Fig. 17: Configure Event Viewer Screen

To change the number of events that appear at a time on the screen, select an option for Per Page.

To set a default query to be loaded each time you visit the Event Viewer, enter it in the ‘Default Query’ field.

To set a default time period for the Event Viewer, select ‘Default time range’.

You can show, hide, and add columns.

You can reorder the columns by dragging.

You may select the type of data displayed in each column using the dropdown menu associated with each one. You can also type in the field under Column Key to search for the type of data to display.

To add custom data, type the name of the metadata key in a field under Column Key. You can then change the column header for displaying the data by editing the corresponding field under Column Label.

Select Update. The configuration changes become effective. These changes will persist when the Event Viewer is reloaded.

Procedure: Sort the Columns in the Event Viewer

Follow these steps to temporarily change the sorting order in the Event Viewer:

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

Select EVENTS in the menu on the left.

Select EVENT TABLE from the submenu. The Event Viewer appears. (see Figure: Event Viewer).

To change the sorting order of the data in the Event Viewer, select the arrow next to the TIMESTAMP column header. This will sort the events in either ascending or descending order, based when the BluVector Sensor processed the event.

Searching Events with Queries

You can search for events using queries. You may enter the queries into the autocomplete search bar near the top of the Event Viewer to display results in the Event Viewer. The queries can be saved for reuse.

The metadata fields are all lowercase to make queries as easy as possible.

In the schema, each level is indented from the previous level. When you create a query, you will represent the indentation hierarchy with a period separating the levels of key field parameters. For example, files. analysis.hector.confidence starts with files as the first level, followed by a period, and then analysis as a subfield of files, and so on.

The flags key field is particularly important. The strings returned by the flags key identify which analyzer flagged the file, based on the flagging criteria defined for the analyzer (see Section: Configuring BluVector Sensor Analyzers for more information). Any of the flagging criteria can flag the same pieces of content. It is not uncommon to see a list of identifiers in the same flags key.

For a complete list of queryable fields and a description of each, click within the search bar in the Event Viewer. Select Query Syntax Help. A quick reference chart will appear. In this quick reference, the Query Schema and Operations tabs will display information about the schema and how to query for different fields and values. Expand each category to see more information. The references are dynamically generated based on what is currently available in the system. Also see Section: Understanding Data Schemas for more reference information.

The following table provides some example queries that are based on the Python Query Language (PQL) syntax.

Query Name | PQL Query Syntax |

|---|---|

Flagged by analyzer | files.flags!=None |

Hector potentially malicious | files.flags==”hector” |

Password-protected zip archive | files.analysis.extractor.result.msg==”Password protected” |

Hector suspicious PDF | files.flags==”hector” and files.filetype==”pdf” |

Hector suspicious PE32 | files.flags==”hector” and files.filetype==”pe32” |

ClamAV trojan | files.analysis.clamav.result.stream==regex(“Trojan”) |

Intelligence hit | intel!=None or files.intel!=None |

The following sections provide more information about using queries. Also see Section: Changing the Status of Events and Files for how to change the status of events and files after reviewing them.

Viewing Example Queries by Use Case

This section provides examples of how queries can be constructed for specific use cases. Consider that you want to query about a website or a sending mail server hostname:

Query Use Case | Query Example |

|---|---|

Just the hostname | meta.host=="www.acme.com" |

Not the hostname | meta.host!="www.acme.com" |

Using a regex to search for NOT a hostname | meta.host==regex(".*(?<!acme.com)$") |

Suspicious and malicious events with a whitelisted host | status>=2 and meta.host==regex(".*(?<!mcafee. com)$") |

The Not Equal To operator (!=) may not be used in conjunction with a regular expression. Instead, the regular expression itself must handle the exclusion.

Event status may be queried as either a string or as a numeric value. Trusted (0), Info (1), Suspicious (2) and Malicious (3) are available to make it easier to search for events above or below a specific status type by using numeric comparators (>, <, >=, <=).

Here are additional query use case examples:

Query Use Case | Query Example |

|---|---|

Suspicious WordPress content | status=="suspicious" and meta.headers.url==regex("wp-content") |

Ignore specific content-types (for example, SilverLight) | meta.headers.content-type!="application/ x-silverlightapp" and meta.headers.content-type!="x-silverlight-app" |

Files not in a list of particular types | files.ftype not in ["pdf","cdf","ooxml"] |

Ignore common top-level domains (TLD) | meta.host==regex(".*(?!.(com|mil|org|net))$") |

Suspicious files over HTTP where files are not PDF or CDF and not from a common TLD | status=="suspicious" and meta.app == "http" and files.ftype not in ["pdf", "cdf"] and meta.host==regex(".*(?<!.(com|mil|org|net))$") |

Executables over SMTP email | files.analysis.hector.result.filetype== regex("pe32") and status!="trusted" and meta.app=="eml" |

Source IP is one of certain addresses | meta.src in ["84.120.132.174", "95.150.199.54"] |

Entering a Query into the Search Bar

Before you run a query, you may want to filter the events through PinPoint, so that you can query a more select group of events (for more information, see Section: Sorting with PinPoint.)

The following procedure describes how to enter a query into the search bar in the Event Viewer.

Procedure: Query for Events

Follow these steps to enter a query for events:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

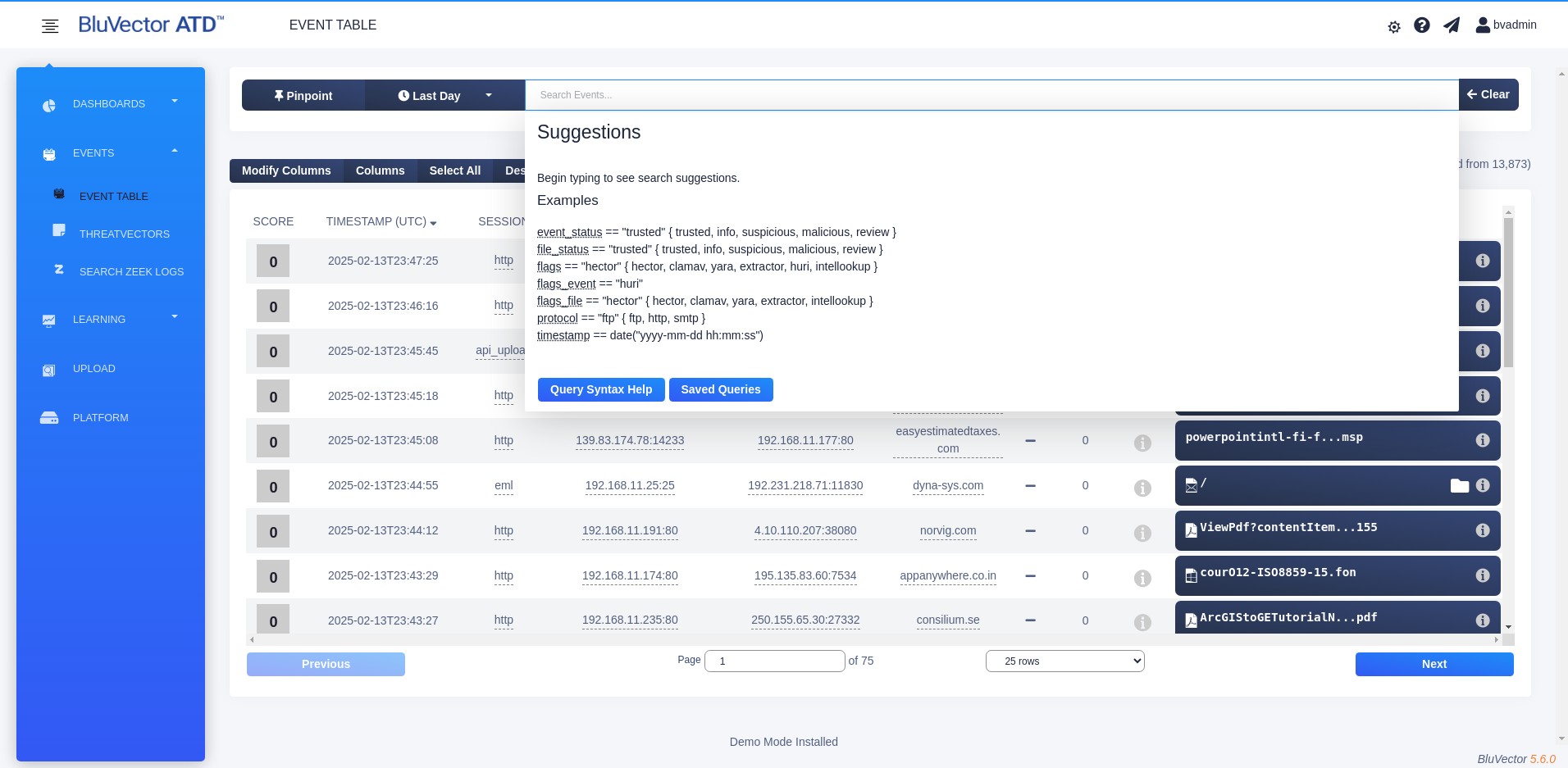

Click within the search bar. The Event Queries autocomplete menu appears, which provides suggestions to automatically complete your queries as you type (see Figure: Search Bar with Event Queries Autocomplete Menu). You can also access syntax help and saved queries from this window.

Fig. 18: Search Bar with Event Queries Autocomplete Menu

You can run a query in one of these ways:

You can type a query in the search bar.

You can run a previously saved query.

You can select the underlined information in the Event Viewer and choose from a menu to generate a query. This will open a new Event Viewer and automatically fill in the search bar with the query. For more information about underlined fields, see Section: Using Field Enhancers.

To start a new query, enter the field names into the search bar that you would like to query for, based on the schemas described in Section: Understanding Event Schema.

For example, if you want to search for files with a Suspicious status, enter: files.status == "suspicious".

When you begin typing, the autocomplete menu will suggest possible keys. For certain keys, it will also suggest the values that you can use to complete the query.

You can also refer to the quick reference chart, which appears when you select Query Syntax Help. It provides information about the syntax and which fields you can query. The references are dynamically generated based on what is currently available in the system.

Here are some tips for typing queries in the search bar:

files queries start with “f”; type f for the autocomplete menu to show available queries for the files key.

meta queries start with “m”; type m for the autocomplete menu to show available queries for the meta key.

intel queries start with “i”; type i for the autocomplete menu to show available queries for the intel key.

Queries that are searching for a certain value must have a key followed by two equal signs (==) and the value. If the value is a string, it needs to be inside quotation marks. For example, if you want to search for all events with a Suspicious status, you would type status == "suspicious". The quick reference chart lists which fields have string values rather than Boolean or integer values. If you want to search for all events that did not have a Suspicious status, type an exclamation mark instead of the first equal sign, to denote “does not equal”. For example, the query would be status` != `"suspicious".

Refer to the examples earlier in this section and the examples in Section: Understanding Event Schema.

To run a previously saved query, click within the search bar. Select Saved Queries in the autocomplete menu that appears. A list of saved queries pops up. Select the name of the saved query that you would like to run. The query will automatically populate the Event Viewer with the appropriate events.

To run a query from the information in the Event Viewer, find any underlined information that you would like to search for. This information can come from the Event Viewer rows or the Event Details Screen. Select the underlined information, then select Search for this ..... For example, if an event exists with a source IP address of 123.45.6.78, select that value in the Source IP column of that event and then select Search for this source IP. The Event Viewer will populate with events from that source IP address. For more information about underlined fields, see Section: Using Field Enhancers.

After you have entered the query fields, press Enter on your keyboard to run the query. The query will automatically populate the Event Viewer with the related events.

To clear the information from the search bar, select Clear.

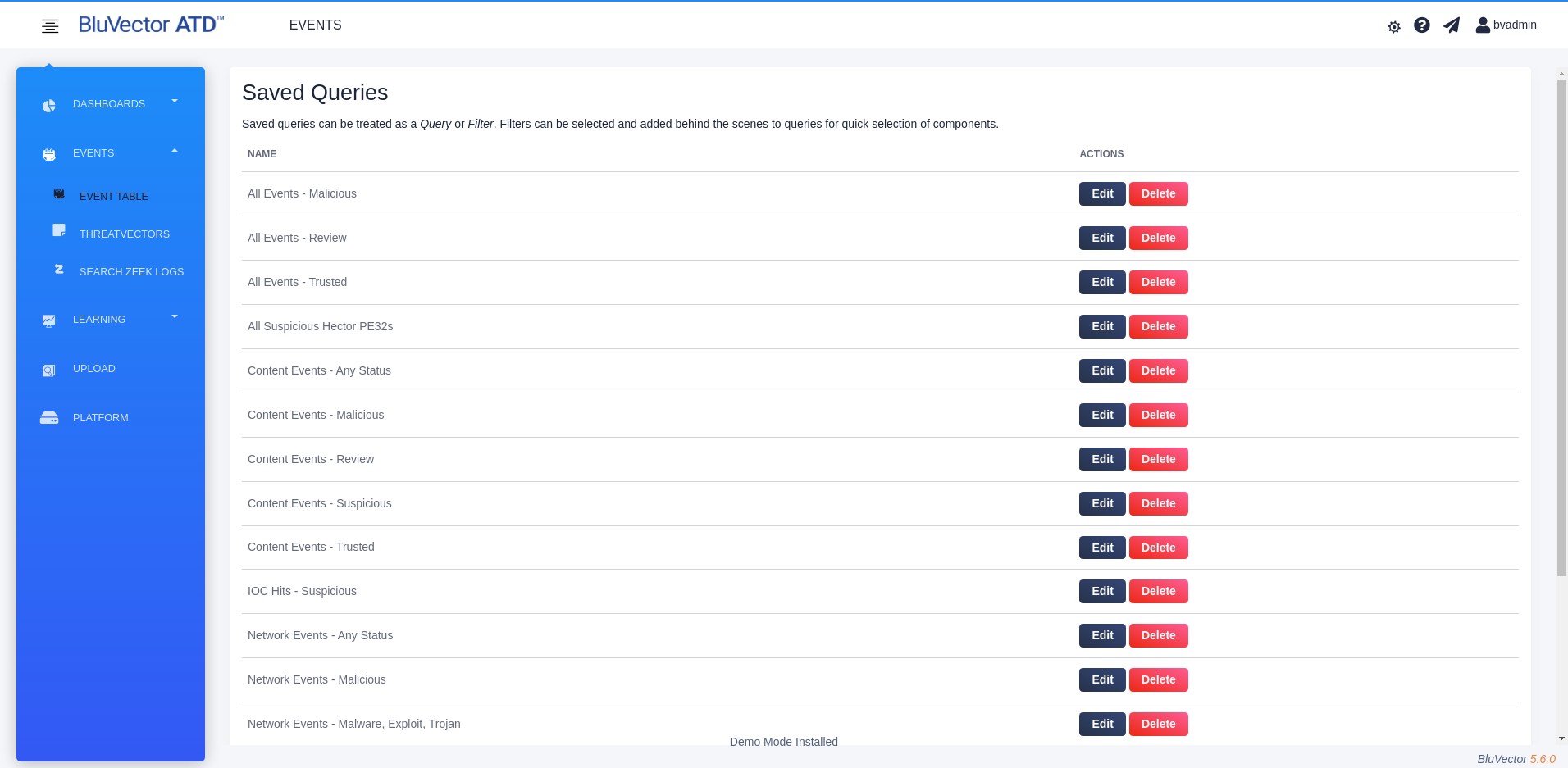

Managing Saved Queries

After you have run a query, you might want to save that particular query so that you can easily use it again later. You also may have a query that you plan to use repeatedly, so you want to be able to access it quickly. You can save a query, and then it will appear in the list of saved queries that can be accessed from the autocomplete menu which appears when you click within the search tab in the Event Viewer.

Before saving a query, it is helpful to test the query by running it, so that you know that it works and will produce the results you are looking for.

The following procedure describes how to save a query for later use.

Procedure: Save a Query

Follow these steps to save a query:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

Click within the search bar. The autocomplete menu appears (see Figure: Search Bar with Event Queries Autocomplete Menu).

You can start saving a query by one of these methods:

If your desired query is already entered in the search bar, select Save Current.

If the search bar is empty and you are ready to create a new saved query:

Select Saved Queries. A list of saved queries pops up.

Select Edit Saved Queries. The “Saved Queries” Screen appears.

Select New Query.

The “Create Saved Query” Screen appears for you to enter the new information.

Enter a name for the query in the ‘Query Name’ field.

The ‘Query’ field might be already filled in (if a query was already entered in the search bar), or it might be blank. If it is blank, enter your query into the Query field.

Select Create to create and save your valid PQL Query. Select Cancel if you decide that you do not want to create the query.

After saving, the “Saved Queries” Screen will appear (see Figure: Saved Queries Screen). It lists all the queries that you have saved.

Fig. 19: Saved Queries Screen

9. To create another query, select New Query and repeat the appropriate steps.

The following procedure describes how to edit a saved query.

Procedure: Edit a Saved Query

Follow these steps to make changes to a saved query:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

Click within the search bar. The autocomplete menu appears (see Figure: Search Bar with Event Queries Autocomplete Menu). Select Saved Queries. A list of saved queries pops up.

Select Edit Saved Queries. The “Saved Queries” Screen appears, listing the saved queries (see Figure: Saved Queries Screen).

Select Edit next to the name of the query that you would like to edit. The “Update Saved Query” Screen appears for you to change the information.

Edit the information in any of the fields (see the previous procedure for saving a query for more information about these fields).

When you have finished editing, select Save changes to save your changes, or select Cancel to discard your changes. You will be returned to the “Saved Queries” Screen.

The following procedure describes how to delete a saved query.

Procedure: Delete a Saved Query

Follow these steps to delete a saved query:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

Click within the search bar. The autocomplete menu appears (see Figure: Search Bar with Event Queries Autocomplete Menu). Select Saved Queries. A list of saved queries pops up.

Select Edit Saved Queries. The “Saved Queries” Screen appears, listing the saved queries (see Figure: Saved Queries Screen).

Select Delete next to the name of the query that you would like to delete. The “Delete Saved Query” Screen appears.

You will be asked to confirm whether you want to delete the query. If you are sure that you would like to delete the query, select Delete. You will be returned to the “Saved Queries” Screen, and the name of the query will no longer be listed.

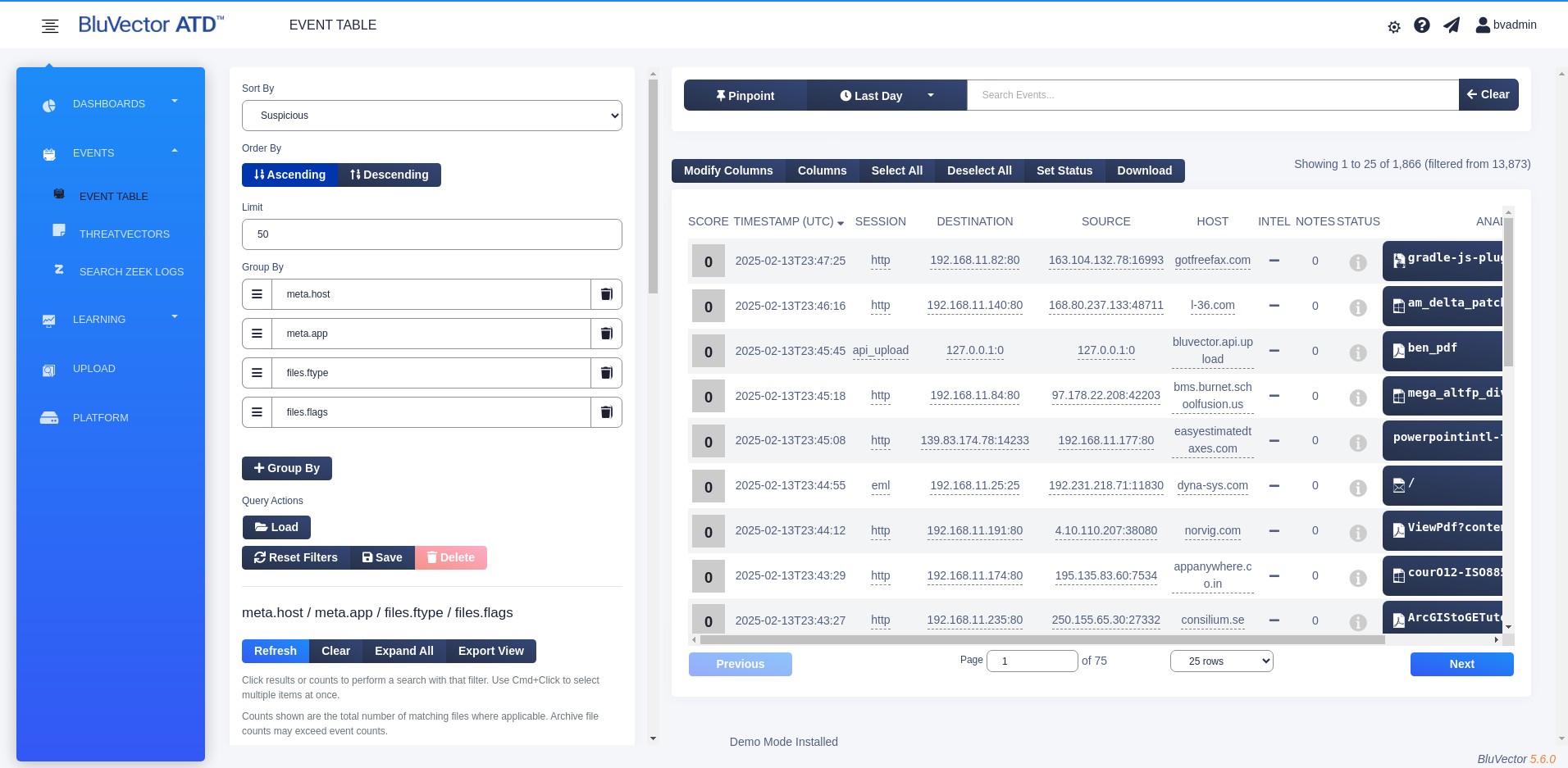

Sorting with PinPoint

The PinPoint tool complements the Event Viewer. It simplifies searching for specific information that would otherwise be challenging to find. PinPoint is especially useful when you must sort through a large dataset to find certain information and to identify anomalies or suspicious network content. For example, you can use PinPoint to filter all but a particular filetype (such as PDF). You can then run a query on just those files to find a particular hostname. Many security analysts prefer using the command-line interface to perform such activities; however, PinPoint provides a faceted search approach allowing analysts to see all available values for the metafield and combine them one at a time.

One of the key fields in PinPoint is Group By. It represents the core feature of PinPoint, because the Group By information helps to narrow the number and types of events that appear. You can add multiple Group By entries to functionally decompose and filter across unique metadata fields. For example, you may want to initially group by protocol, then group by analyzer flags, and then group by host names. If you drill down to the host name level, you can quickly review each of the host names for events over HTTP that were flagged by the Machine Learning Engine.

In the Group By field, you can specify dest, src, meta.dest, and meta.src using CIDR notation to group the results. For example, you could enter dest/8 or meta.src/13. The resulting rows will display the starting IP for each group.

Note:

You can also use CIDR notation in the search bar to filter the events. For example, you can enter dest == "10.0.0.0/8". Also note that although you can use the search bar to query using the ip field with CIDR notation, the ip field is not applicable to the PinPoint feature.

When the search is complete, PinPoint will organize the number of events by their event status (such as Trusted, Info, Suspicious, Malicious, and Review). For example, if you choose Info for Sort By in descending order, your results will show events from all statuses, and will order them according to the attribute that had the most Info events. You can select the plus sign next to an attribute to drill down for more information. You can also select any of the numbers to display those events in the Event Viewer.

You may export the PinPoint results by selecting Export View. The results will be exported in a comma-separated value (.csv) format. Only the expanded rows will be included in the export. You may select Expand All prior to export to get complete results. Because PinPoint is a multi-dimensional analysis tool with multiple drill-down options, the exported results must be flattened to accommodate the .csv format.

The following procedures describe how to use PinPoint to sort information, as well as how to save and load search criteria.

Procedure: Sort Information with PinPoint

Follow these steps to sort information with PinPoint:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

Select Pinpoint. The “PinPoint Query” Screen appears. (see Figure: PinPoint Query Screen).

Fig. 20: PinPoint Query Screen

The time frame and query for the PinPoint results can be controlled by search bar entries (see Section: Searching Events with Queries).

From the ‘Sort By’ menu, select which status you would like to use to sort the information.

Select Ascending or Descending for ‘Order By’ to determine whether to view the events in ascending or descending order.

Set a limit to the number of unique items displayed for the first ‘Group By’ field by entering a number for ‘Limit’.

In the ‘Group By’ field, type the attribute by which you want to sort the data. As you start typing, the autocomplete feature will display possible attributes that you could use. See Section: Sorting with PinPoint for more details about the ‘Group By’ field.

For a complete list of queryable fields and a description of each, click within the search bar. Select Query Syntax Help. A quick reference chart will appear. In this quick reference, the Query Schema and Operations tabs will display information about the schema and how to query for different fields and values. Expand each category to see more information. The references are dynamically generated based on what is currently available in the system.

To add additional ‘Group By’ fields, select + Group By and type the appropriate information in the new fields.

To delete a ‘Group By’ field, select the trash can to the right of the field.

You can reorder the ‘Group By’ fields by dragging the left side of the fields.

To run the PinPoint search, select Go. The results will appear below the PinPoint fields, on the left side of the screen.

You can select any of the results to have the tool automatically build your query and display those events in the Event Viewer.

You can select multiple rows in the PinPoint results to filter the results in the Event Viewer. Use the keyboard and mouse sequence for your computer to perform multi-selection. For example, on a Mac computer, use Cmd+Click.

10. To close PinPoint, again select Pinpoint near the search bar.

Procedure: Save a PinPoint Search

Follow these steps to save a set of PinPoint criteria for a later search:

Complete a PinPoint search, as described in the previous procedure.

Select Save.

In the window that appears, type a name for the search you are saving.

Select Create Query.

Procedure: Load a Saved PinPoint Search

Follow these steps to load and run a previously saved PinPoint search:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

Select Pinpoint. The “PinPoint Query” Screen appears. (see Figure: PinPoint Query Screen).

Select Load. A menu appears.

Choose a saved search from the menu. These searches are either default search options or searches that any user has previously saved.

After you select a search, the PinPoint fields will automatically populate.

To run the search, select Go.

Using the Event Details Screen

To view detailed event and file metadata as well as automated correlations, bring up the “Event Details” Screen by following these steps:

Log in to the ATD GUI (see Section: Logging into the ATD GUI).

Select EVENTS in the menu on the left.

Select EVENT TABLE from the submenu. The Event Viewer appears (see Figure: Event Viewer).

Select an item under the ANALYSIS column in the row for the event and files you wish to review. A popup window appears, displaying the “Event Details” Screen (see Figure: Event Details Screen).

Fig. 21: Event Details Screen

Fig. 21: Event Details Screen

To close the Event Details Screen popup:

Select the X button at the top right of the popup window.

Select the area outside of the popup window.

Press the Esc key.

Note:

If you select the EVENT ID link, the information from this popup view will load into a full screen. This is useful because you can open the link in a new tab in order to view the information at a later time and continue browsing other events. To do this, right-click the link and select Open Link in New Tab. You can also email this link to other analysts if you would like for them to look at the event information in a full screen.

The “Event Details” Screen includes automated correlations to other detection events as well as to network metadata (Zeek logs).

Event correlation is generated when you open the “Event Details” Screen and is effective for events occurring within five minutes of the event being viewed.

Network metadata correlation occurs at the time of the event creation and is effective for a user-configurable time window of up to 15 minutes surrounding the event.

The top of the “Event Details” Screen displays an icon representing the event status, followed by icons for the event analyzers that hit.

Information about the event is displayed in the Event Metadata section on the left side. Details include event ID number, time of the event, source IP/IPv6 and port, destination IP/IPv6 and port, geolocation, host name, and the session (for example, HTTP). Next to the destination and source IP addresses, a flag may appear for the country associated with the event. You can move the mouse cursor over a flag to see the name of the country.

You can select fields that are underlined for more options (see Section: Using Field Enhancers).

You can take further action by selecting from the dropdown options at the top of the screen:

Submit – Select this option to instantly forward the content to already-configured post analyzers, or to go the configuration screen to add more post analyzers. (See Section: Configuring Post Analyzers for more information.)

Connectors – Select this option to navigate to external URLs using the current content metadata. (See Section: Configuring Connectors for more information.)

Download – Select this option to download event and file metadata. (See Section: Downloading Files and Data for more information.)

Set Status – Select this option to change the status of the event, which is distinct from the status of its files. A dropdown menu of the status options appears (see Figure: Changing Status of Event on Event Details Screen). Select the icon and option in the dropdown menu that represents the desired status for marking the event. For additional information on changing the status of an event or file, see Section: Changing the Status of Events and Files.

If there is a Hunt Score, the Hunt Score section shows a breakdown of the event score. Each scoring factor is listed, along with its contribution to the total score.

The Overview section for the event displays details about BluVector analysis performed on the event metadata, such as hURI hostname analysis results.

A graph displays the number of various filetypes seen for this host in the last 30 days for both flagged and unflagged content. You can move the mouse over the graph to see the number of files for a particular filetype within the time period.

Any status changes made to this event will appear in the Status Changes section, listed with the date, the user who made the change, and the change in status.

Fig. 22: Changing Status of Event on Event Details Screen

Fig. 22: Changing Status of Event on Event Details Screen

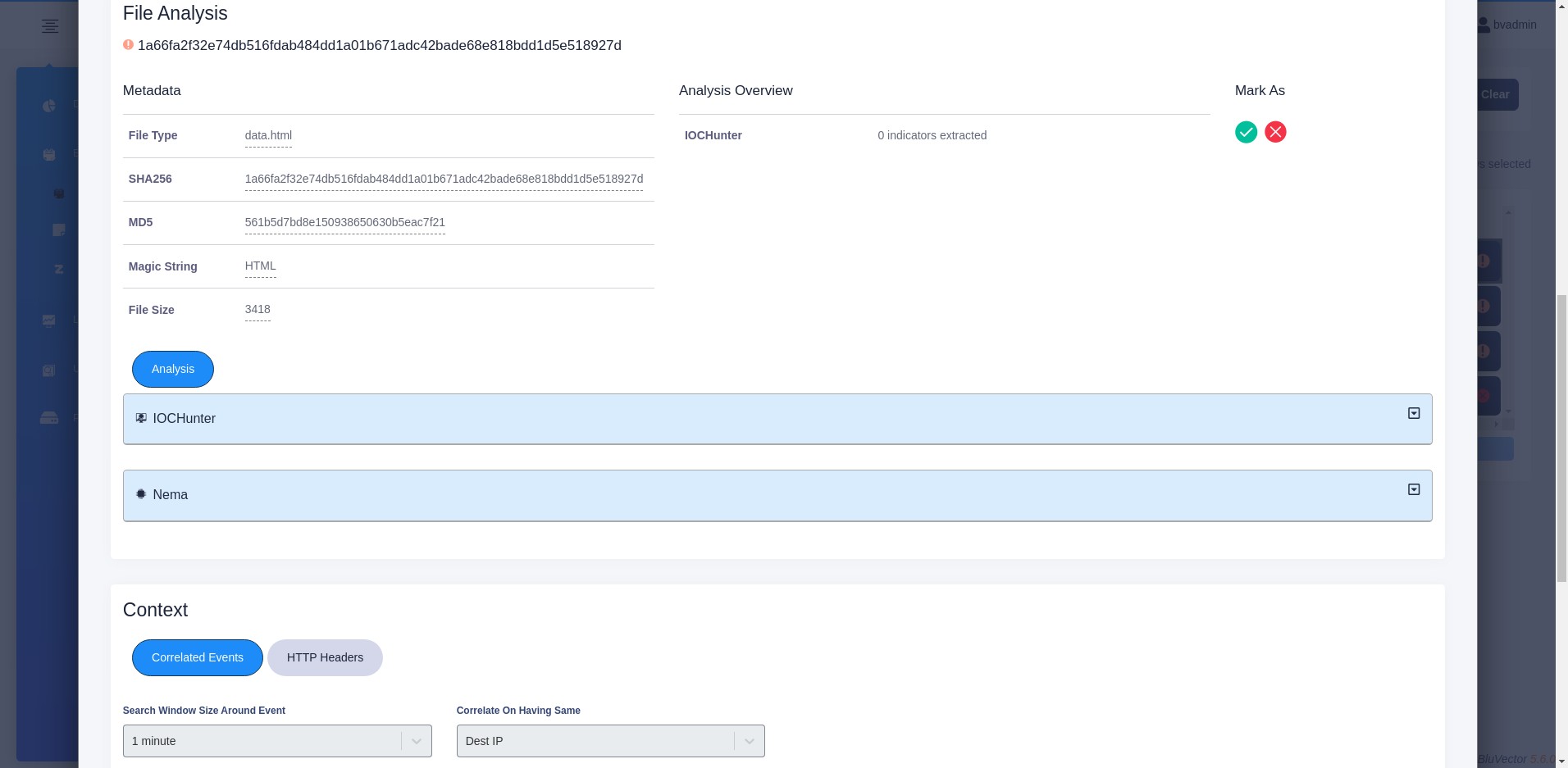

Viewing the File Analysis Section

For events that have files associated with them, the File Analysis section provides information about the files.

If the file is an archive, you can select any of the extracted files from the Choose File Content dropdown menu. In the menu, the file status icon is displayed next to the filename, as well as an icon for the tool that flagged the file. After selecting a file, the information for that file will be loaded into the view.

The metadata for the currently selected file is shown. Details include the filename, type, size, as well as the MD5 and SHA256 hashes.

The file Overview section lists which analyzers processed the file and a summary of the results.

You can mark the status for the selected file by selecting the green checkmark for Trusted or the red X for Malicious. For additional information on changing the status of an event or file, see Section: Changing the Status of Events and Files.

The Analysis tab shows expandable results of the file analyzers. Each analyzer that reviewed the file will have its own section of information. For example:

The Yara section will show which rule the file matched, the owner, the namespace, and any tags.

The ClamAV section lists the signature of the file.

The Hector section displays a graph of the Machine Learning Engine confidence level and the threshold for the file.

The NEMA section shows information about any detected shellcode or obfuscated JavaScript.

If the file is an archive and was analyzed by the Extractor Analyzer, the decompressed contents will be listed under Extractor.

The Post Analysis tab shows a summary if any post analyzers are configured (see Section: Configuring Post Analyzers) and their analyses are completed. Post analyzer analyses can take between five seconds to several days to complete. You can expand a section to see more information. Typically, the summary will include the name of the post analyzer, the likelihood of the analyzed content being malicious, and more details of the analysis.

BluVector provides dynamic post analysis using a cloud-based sandbox called BluVector Dynamic Malware Analysis in the Cloud. See Section: Learning about BluVector Dynamic Malware Analysis in the Cloud to learn more about BluVector Dynamic Malware Analysis in the Cloud.

If a threat intelligence provider has flagged the event as being suspicious, then an Intelligence tab will appear. This tab appears only if threat intelligence providers have been configured (see Section: Configuring Threat Intelligence Providers). Intelligence analysis information is available immediately, since the correlation is done on the BluVector Sensor. Select the tab for more detailed information about what the threat intelligence analyzer discovered.

The Hexdump tab shows the raw binary representation of the file, along with an ASCII interpretation of the raw byte sequence. You can choose to see the Head or Tail, and you can download the file for further review.

The Submit to BV tab allows you to send a summary of the event and file content to the BluVector threat intelligence team. You will then receive guidance on the appropriate adjudication for the content.

Viewing the Event Analysis Section

The Event Analysis section contains an Analysis tab. The hURI Analyzer results appear in this tab. It uses machine learning techniques to detect potentially malicious URIs. The hURI row can be expanded to show more information, such as the normalized URL and confidence level. For information on how to configure the hURI Analyzer, see Section: Configuring the hURI Analyzer.

Viewing the Context Section

The Context section provides additional details about how the event relates to other events. The HTTP Headers tab displays relevant network application layer headers, if they are available.

The Correlated Events tab displays a table of the related events, showing the timestamp, event ids, scores, hostnames, and flagging analyzers. To change the time range and matching metadata, select choices in the Search Window Size Around Event dropdown and the Correlate On Having Same dropdown. The table will update to show the new context for this event.

The HTTP Headers Tab provides information about the connection.

Using the Notes Section

You may add a note about this event in the Notes section. The note will apply to the event as a whole. To add a new note, select Add. Notes are viewable by all users.

Changing the Status of Events and Files

In BluVector, events and files have associated statuses that reflect the level of suspicion (see Section: Using the Event Viewer for more information about viewing events, files, and status). As you investigate events and files, you can change their status to reflect what you have found.

Note:

Individual files may have their own statuses, which are distinct from the event status.

If a file status is set to Malicious, its associated event status will automatically change to Malicious, because the event has at least one malicious component. Setting a file status to Trusted does not affect the event status, since malicious events may still contain clean files.

Setting an event status to Malicious does not affect the status of its files, because the files themselves may still be benign. Setting an event status to Trusted changes the file status of all associated files to Trusted as well. That is because an event that is wholly Trusted implies that all files within it are benign as well.

The following procedures describe how to change the status for an event and for a file, as well as for multiple events.

Procedure: Change the Status of an Event

Follow these steps to change the status of an event:

Note:

There is no option to undo a change once you have changed the status of multiple events. You can only change the status back by following the steps in this procedure again. Also, be aware that when you change the status of an event in the Event Viewer, other users will be able to see that the status of the event has changed.

Navigate to the Event Viewer (see Section: Using the Event Viewer).

To change the status of a single event, find the row for that event. Select the icon in the STATUS column. A dropdown menu of status icons then appears. Select the icon in the dropdown menu that represents the desired status for marking the event (for a description of the icons, see Section: Using the Event Viewer). The icon in the event row will eventually change to reflect the new status.

You can also change the status of a single event from the “Event Details” Screen (see Section: Using the Event Details Screen).

To change the status of multiple events at the same time:

Select the rows of the events you would like to change.

Select Set Status in the menu bar above the table of events. A dropdown menu appears (see Figure: Event Status Change Screen).

From the dropdown menu, choose an option beginning with the word Selected that represents the desired status for marking the events. For example, to change the status of the selected events to Review, select Selected Review. The status icons in the selected event rows will eventually change to reflect the new status.

Fig. 23: Event Status Change Screen

To change the status of all the events currently shown on the screen in the Event Viewer:

Select Select All in the menu bar above the table of events. (Note: If you need to deselect all the events, select Deselect All.)

Select Set Status in the same menu bar. A dropdown menu appears (see Figure: Event Status Change Screen).

From the dropdown menu, choose an option beginning with the word Selected that represents the desired status for marking the events. For example, to change the status of the selected events to Suspicious, select Selected Suspicious. The status icons in the selected event rows will eventually change to reflect the new status.

To change the status of all the events that appeared as the result of running a query:

Select Set Status in the menu bar above the table of events. A dropdown menu appears (see Figure: Event Status Change Screen).

From the dropdown menu, choose an option beginning with the word Results that represents the desired status for marking the events. For example, to change the status of all the query results to Suspicious, select Results Suspicious. A window will appear stating how many events your change will impact.

Select Confirm to continue. The status icons of the events in the query will eventually change to reflect the new status.

Procedure: Change the Status of a File

Follow these steps to change the status of a file:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

Select the data in the ANALYSIS column for the desired file. The “Event Details” Screen appears (see Figure: File Details of an Event with Status Change Options).

Fig. 24: File Details of an Event with Status Change Options

If the file is in an archive, selected the desired file in the Choose File Content dropdown menu.

Select the green checkmark to mark the file Trusted or select the red X to mark the file Malicious. The icon of the file will eventually change to reflect its new status.

Using Field Enhancers

In many views, you can select a field that has a dotted underline in order to perform additional tasks. A context-driven menu will appear, called a field enhancer. The menu choices will be based on the data in the selected field. Examples of menu choices include:

Searching for all events that have the same data in this field.

Searching for all events with the same combination of data in certain fields for this event.

Adding a workflow based on this data.

Finding all events created from a similar source.

You can find fields with dotted underlines on these screens:

Section: Using the Event Viewer

Section: Using the Event Details Screen

Understanding Data Schemas

BluVector uses a variety of data models, or schemas, to capture information related to network events, files carved from the network and intelligence about potential threats to an enterprise. These schemas are used to form queries and define routing criteria throughout the system.

See the following sections for more information on the various data schemes:

Section: Understanding Event Schema

Section: Understanding Files Schema

Section: Understanding Meta Schema

Section: Understanding Intel Schema

Understanding Event Schema

In order to perform the queries for files or events as described in Section: Viewing Event Information, it is helpful to know how BluVector stores the data. To see a complete list of queryable fields and information, bring up the Event Viewer and click within the search bar (see Figure: Event Viewer). Select Query Syntax Help. A window appears showing a query syntax reference guide. You can select the Query Schema and Operations tabs for information about the schema and how to query for different fields and values. The references are dynamically generated based on what is currently available in the system.

Each event within the Event Viewer contains the first level keys shown below, with values that range depending on the event protocol, event intel hits, number of files associated with the event, and software release version.

Some of the most important first and second level keys are:

{

"_id": { "$oid" : "<unique object identifier>" },

"release": "<release number>", // Dotted BV Release string, i.e "1.5.0"

"status": 0 - 3, // 0 = Trusted, 1 = Info, 2 = Suspicious and 3 =

𝜔→Malicious

"score": <float>,

"meta": { key,value pairs... },

"files": [ {file1}, {file2} ],

"intel": [ {intel1}, {intel2} ],

"analysis": {

"huri": { dict - key, value pairs },

"suricata": { dict - key, value pairs },

}

}The files key is a list of dictionaries, where each dictionary represents the analysis of one file. There can be several files for one event.

The intel key works similarly, where any number of threat intelligence providers can hit on one event. The intel key is also a list of dictionaries, where the analysis from each intel provider is stored in its own dictionary.

The analysis key displays analysis information generated from analyzers that operate at the event level. The only analyzer that subscribes to events (rather than subscribing to files/content) is hURI. For a full description of BluVector analyzers, see Section: Configuring BluVector Sensor Analyzers.

The meta key is a dictionary containing event metadata (such as IP addresses, hostnames, ports), as well as all of the information provided in the protocol headers.

The release key identifies which version of software was running at the time the event was generated. This ensures consistency between software releases, since the schema may change.

The following table describes certain keys:

Event(s) Key Name | Key Description |

|---|---|

_id | Unique identifier |

release | BluVector release version string in ‘x.x.x’ format |

meta | Metadata dictionary for the event being processed (such as protocol headers) |

files | List of one or more dictionaries containing file analyses |

intel | List of one or more dictionaries containing intelligence analyses |

Understanding Files Schema

As described in Section: Understanding Event Schema, the files list contains complete analysis information for each individual file that is analyzed. The files schema is made up of the following key-value pairs:

"files": [

{

"fname": "<filename string>",

"filetype": "<libmagic filetype description>",

"analysis": {

"yara": { dict - key, value pairs },

"clamav": { dict - key, value pairs },

"hector": { dict - key, value pairs },

"nema": { dict - key, value pairs },

"iochunter": { dict - key, value pairs },

"intellookup": { dict - key, value pairs },

"extractor": { dict - key, value pairs },

"ftype": "<short filetype string>”,

"filesize": <int filesize in bytes>,

"sha256": <sha256 hash string>,

"md5": <md5 hash string>,

"intel": "<intel hits on file metadata>”,

"status": <int representing file status>,

"post_analysis": <int representing post analysis results>,

"flags": [<analyzer(s) that have flagged a file as suspicious>] }

},

{ other file if present .... }

]The following table describes each of the possible key-value pairs within a file analysis:

File(s) Key Name | Description |

|---|---|

files.fname | File name string |

files.ftype | Short filetype string classification |

files.filetype | Filetype classification from libmagic |

files.filesize | Size of the file in bytes |

files.flags | List of analyzers that have flagged the file |

files.analysis | Dictionary of analysis results for each analyzer |

files.md5 | 128-bit hash of the file |

files.sha256 | 256-bit hash of the file |

The files.analysis key is used in conjunction with each of the analyzers built into the system (for example, files. analysis.hector). The value from each analyzer is a dictionary containing its respective analysis result. For more information, see the following table.

File(s) Analysis Key | Description |

|---|---|

files.analysis | “yara”: { key, value dictionary } |

files.analysis | “clamav”: { key, value dictionary } |

files.analysis | “hector”: { key, value dictionary } |

files.analysis | “nema”: { key, value dictionary } |

files.analysis | “iochunter”: { key, value dictionary } |

files.analysis | “intellookup”: { key, value dictionary } |

files.analysis | “extractor”: { key, value dictionary } |

files.analysis | “huri”: { key, value dictionary } |

The following tables describe the schema for the analyzers in more detail.

The Yara analyzer schema is as follows:

Yara Analyzer Keys | Description |

|---|---|

files.analysis.yara | { key, value dictionary } for Yara |

files.analysis.yara.elapsed_time_ms | An integer representing analysis time in milliseconds |

files.analysis.yara.result.namespace | Source of the yara rules file |

files.analysis.yara.result.rule | Specific rule within the yara rules file that hit on the content |

files.analysis.yara.result.owner | String identifying the owner of the rule |

files.analysis.yara.result.tags | List of identifying Yara rule tag strings |

files.analysis.yara.result.meta | { key, value dictionary } providing additional information |

The ClamAV analyzer schema is as follows:

ClamAV Analyzer Keys | Description |

|---|---|

files.analysis.clamav | { key, value dictionary } for ClamAV |

files.analysis.clamav.elapsed_time_ms | An integer representing analysis time in milliseconds |

files.analysis.clamav.result.stream | String that identifies the signature matching the file |

The Machine Learning Engine analyzer schema is as follows:

Machine Learning Engine Analyzer Keys | Description |

|---|---|

files.analysis.hector | { key, value dictionary } for Hector |

files.analysis.hector.elapsed_time_ms | An integer representing analysis time in milliseconds |

files.analysis.hector.result.confidence | A float value that indicates the confidence level of the analyzed file |

files.analysis.hector.result.threshold | A float value that indicates the threshold confidence level at which the file will be flagged |

files.analysis.hector.result.filetype | String identifying the filetype |

files.analysis.hector.result.parsers | Extensive filetype-specific metadata generated about the file used as features for the machine learning models |

The NEMA Speculative Code Execution Engine analyzer schema is as follows:

NEMA Analyzer Keys | Description |

|---|---|

files.analysis.nema | { key, value dictionary } for NEMA |

files.analysis.nema.elapsed_time_ms | An integer representing analysis time in milliseconds |

files.analysis.nema.result.shellcode | { key, value dictionary } for the shellcode engine |

files.analysis.nema.result.shellcode.info. total_emulated_instructions | An integer value that indicates the total number of emulated instructions |

files.analysis.nema.result.shellcode.info. instruction_chains_found | An integer value that indicates the number of instruction chains found |

files.analysis.nema.result.shellcode.info. heuristic_hits | List of shellcode heuristic hits |

files.analysis.nema.result.shellcode.hits | { key, value dictionary } providing additional information for any heuristic hits |

files.analysis.nema.result.shellcode. num_interesting_chains | An integer value that indicates the number of interesting execution chains detected |

files.analysis.nema.result.shellcode.inter esting_chain_info | List of integer values representing the offset of the interesting execution chains |

files.analysis.nema.result.shellcode.alerts | List of shellcode analysis alerts used for flagging |

files.analysis.nema.result.javascript | { key, value dictionary } for the JavaScript engine |

files.analysis.nema.result.javascript.confid ence | A float value that indicates the confidence level of the analyzed javascript |

files.analysis.nema.result.javascript. threshold | A float value that indicates the threshold confidence level at which the javascript will be flagged |

files.analysis.nema.result.javascript.info. suspicious_string_entropy | A boolean value that indicates if strings are detected with suspicious entropy |

files.analysis.nema.result.javascript.info. js_bad_practices | List of bad practices for non-obfuscated JavaScript |

files.analysis.nema.result.javascript.info. suspicious_variable_names | A boolean value that indicates if suspicious variable names are detected |

files.analysis.nema.result.javascript.info. obfuscated_variable_names | A boolean value that indicates if obfuscated variable names are detected |

files.analysis.nema.result.javascript.info. excessive_variable_updates | A boolean value that indicates if excessive variable updates are detected |

files.analysis.nema.result.javascript.info. obfuscated_url_protocols | List of obfuscated URL protocols |

files.analysis.nema.result.javascript.info. obfuscated_js_bad_practices | List of obfuscated sensitive JavaScript keywords |

files.analysis.nema.result.javascript.info. obfuscated_sensitive_js_keywords | An integer value that indicates the number of obfuscated sensitive JavaScript keywords observed |

files.analysis.nema.result.javascript.info. obfuscated_js_reserved_words | List of obfuscated JavaScript reserved words |

files.analysis.nema.result.javascript.info. eval_count | An integer value that indicates number of times JavaScript eval function is used |

files.analysis.nema.result.javascript.info. string_modification_function_count | An integer value that indicates number of string modification functions used |

files.analysis.nema.result.javascript.info. avg_length_of_strings | A float value that indicates average length of all the strings in JavaScript |

files.analysis.nema.result.javascript.info. max_length_of_strings | An integer value representing length of the largest string in JavaScript |

files.analysis.nema.result.javascript.info. dom_modification_function_count | An integer value that indicates number of DOM modification functions used |

files.analysis.nema.result.javascript.info. CreateObject_ActiveXObject_count | An integer value that indicates number of times CreateObject or ActiveXObject function is used |

files.analysis.nema.result.javascript.info. keyword_count | An integer value that indicates number of times reserved keywords are used |

files.analysis.nema.result.javascript.info. string_having_iframe_count | An integer value that indicates the number of times iframe is present in the strings |

The Extractor analyzer schema is as follows:

Extractor Analyzer Keys | Description |

|---|---|

files.analysis.extractor | { key, value dictionary } for Extractor |

files.analysis.extractor.elapsed_time_ms | An integer representing analysis time in milliseconds |

files.analysis.extractor.result.msg | String that provides miscellaneous information |

files.analysis.extractor.result.files | List containing information about extracted resources (filename and sha256 hash) |

The following files key fields are most useful for querying events, as described in Section: Viewing Event Information:

‘files.analysis.clamav.result.stream’

‘files.analysis.hector.result.confidence’

‘files.analysis.nema.result.shellcode.alerts’

‘files.analysis.nema.result.javascript.confidence’

‘files.analysis.yara.result.owner’

‘files.analysis.yara.result.namespace’

‘files.analysis.yara.result.rule’

‘files.analysis.extractor.result.msg’

‘files.ftype’

‘files.filetype’

‘files.filesize’

‘files.fname’

‘files.sha256’

‘files.md5’

‘files.flags’

Viewing a File Schema Example

The general database schema is currently used for each file, as described in Section: Understanding Event Schema. An example of the schema for a file is shown below.

When performing the queries, it is important to notice the case of the letters in the key-value pairs. The key fields in the schema are all purposely lowercase.

{

"status": 1,

"filesize": 15404,

"filetype": "PE32 executable (console) Intel 80386 (stripped to

𝜔→external PDB),

for MS Windows,

UPX compressed",

"analysis": {

"hector": {

"elapsed_time_ms": 7,

"result": {

"confidence": 0.1470792577469633,

"processing_code": 0,

"filetype": "code.pe32.console",

"bundle": "BUN_20151201_0",

"threshold": 0.5,

"model": "CLS_CON_20151107_0"

}

},

"clamav": {

"elapsed_time_ms": 84,

"result": {

"stream": "None"

}

},

"yara": {

"elapsed_time_ms": 3,

"results": []

}

},

"ftype": "code.pe32.console",

"fuid": "F9xSiEqDF5pEytDHi9",

"_cls": "File",

"fname": "109057237.exe",

"flags": [],

"sha256": "615c22efe236f131a7e8cd74877f35eec29e6c4eee006e5efb38b45e6a5c7ce5

𝜔→",

"md5": "7e2d779f77995eb5620934ac1a0d4cb0"

}Understanding Meta Schema

The meta key consists of relevant event-based metadata. It also contains all available information from the protocol headers, if applicable. The meta key will return values similar to the following:

"meta": {

"src": <Source Host Name or IP Address string>,

"src_port": <uint16 port number>,

"dest": <Destination Host Name or IP Address string>,

"timestamp": <datetime string>,

"app": <String: application level protocol>,

"headers": { key, value header dictionary }, // Protocol headers.

"host": <Host Name or IP Address string>, ie"xxx.xxx.xxx.xxx",

"dest_port": <uint16 port number>,

"user": <user name string if known>

}The following table provides a description of each of the possible key-value pairs within the meta key:

Meta Key Name | Description |

|---|---|

meta.app | String representing the application protocol (‘http’, ‘eml’, ‘gui_upload’ or ‘api_upload’) |

meta.collector | Hostname of the system that generated the event. When using an ATD Central Manager, it will be the name of the BluVector Collector that sent the event to the ATD Central Manager. |

meta.dest | String value of the destination IP address |

meta.dest_port | Integer of the destination port |

meta.host | String value of the hostname |

meta.src | String value of the source IP address |

meta.src_port | Integer of the source port |

meta.timestamp | String value of when the event was recorded |

meta.uuid | UUID of the system that generated the event. When using an ATD Central Manager, it will be the name of the BluVector Collector that sent the event to the ATD Central Manager. |

meta.headers | { key, value dictionary } stores protocol-specific metadata, if available |

The following meta key fields can be used to query events as described in Section: Viewing Event Information:

‘meta.app’

‘meta.collector’

‘meta.dest’

‘meta.dest_port’

‘meta.host’

‘meta.src’

‘meta.src_port’

‘meta.timestamp’

‘meta.uuid’

Viewing a Meta Schema Example

The event meta schema identifies the network stream and protocol metadata associated with an event. Here is an example of meta schema captured for an HTTP session.

"meta": {

"timestamp": "2022-01-06T16:15:43.176000",

"dest": "10.12.1.102",

"dest_port": 49192,

"src": "70.39.115.202",

"src_port": 80,

"sfa_elapsed_time_ms": 550,

"host": "huqk.lb590wedo.top",

"uuid": "ab690a4b4d614e279a3bed5e7082b437",

"collector": "lkilberg-s420-38.bah",

"email_body": {},

"in_iface": "",

"event_type": "alert",

"flow_id": 769449546505397,

"transport": "TCP",

"pcap_id": "953b8cbc-6f0b-11ec-b6c9-0242ac11000b",

"pcap_name": "5a125eb6421aa91d02ba78fc-NEMA-huqk.lb590wedo.top.pcap",

"extra_info": "[_PID=16354 _UID=0 _GID=0 _COMM=Suricata-Main

_EXE=/usr/sbin/suricata _CMDLINE=\"/usr/sbin/suricata -l

/var/bluvector/upload/953b8cbc-6f0b-11ec-b6c9-0242ac11000b/suricata -k none -r

/var/bluvector/upload/953b8cbc-6f0b-11ec-b6c9-0242ac11000b/tmp1hx5a333.pcap

--user=0 --group=0 --set outputs.1.eve-log.prefix=PCAPPREFIX:{\\\"pcap_id\\\":

\\\"953b8cbc-6f0b-11ec-b6c9-0242ac11000b\\\", \\\"pcap_name\\\":

\\\"5a125eb6421aa91d02ba78fc-NEMA-huqk.lb590wedo.top.pcap\\\"}# \"]",

"matched_rule_action": "status-info",

"matched_rule_query": "suricata!=None",

"matched_rule_description": "Setting suricata hits to info"

}Understanding Intel Schema

The intel key is a list of key-value pairs mapping the intelligence providers that hit on event metadata to their respective analysis of that event. The intel key will return values similar to the following:

{

intel : [

'<provider1>': { key, value intel_dict } ,

'<provider2>': { key, value intel_dict }

]}Downloading Files and Data

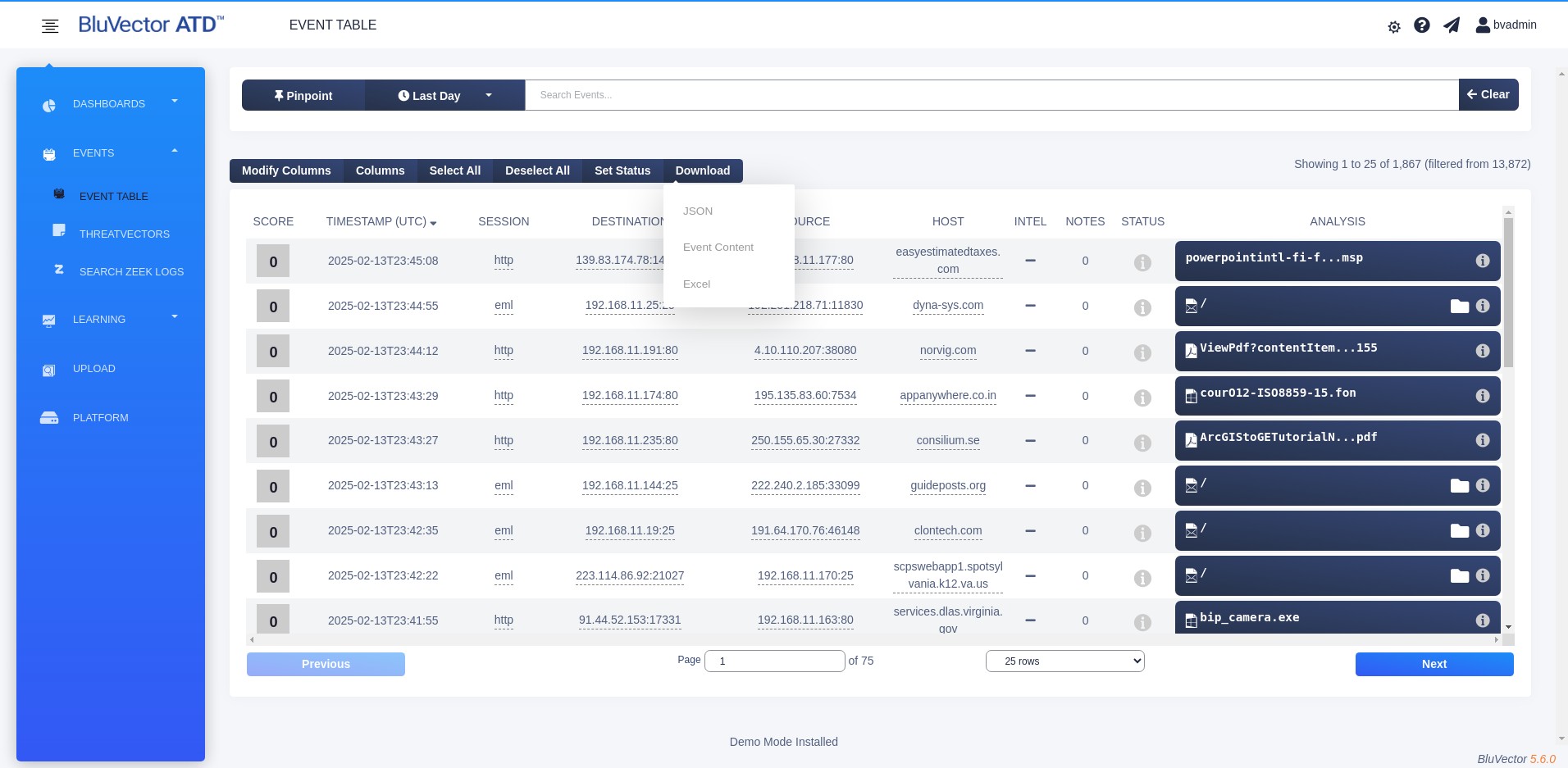

After you have examined files and events in the Event Viewer (see Section: Using the Event Viewer) or “Event Details” Screen (see Section: Using the Event Details Screen), you might want to download the raw data about an event, or even the suspicious file itself. You can download event metadata in raw JSON or Microsoft Excel format for archiving, sharing with other analysts, and auditing. You can also download flagged content to a password-protected zip file, to prevent mishandling of the suspicious content until it is decompressed in an appropriate environment. Additionally, you can download any Targeted Logger information that was collected for the event.

Note:

A file will only be available for download if an analyzer flags it as being suspicious. The flagging criteria are critically important because they determine whether or not file content will be saved to disk. (See Section: Configuring BluVector Sensor Analyzers for more information about configuring analyzers.)

For more information about interpreting the raw data schema, see Section: Understanding Data Schemas.

The following procedures describe how to:

Download raw JSON-formatted data

Export metadata to an Excel file

Download the actual suspicious content

Download Targeted Logger information

To begin any of these procedures, first:

Navigate to the Event Viewer (see Section: Using the Event Viewer).

Select the row(s) of the event(s) for which you would like to download the file(s).

Fig. 25: Download Options Menu in the Event Viewer

Continue by following the appropriate procedure below.

Procedure: Download Raw JSON-Formatted Data

Raw event data in JSON format will have a hierarchical structure. Follow these steps to download data about an event in raw JSON format:

Select Download above the table. The Download Options Menu appears (see Figure: Download Options Menu in the Event Viewer).

From the menu, select JSON.

Procedure: Export Metadata to an Excel File

An Excel file with raw data and other information has a linear format. Follow these steps to export metadata to an Excel file:

Select Download above the table. The Download Options Menu appears (see Figure: Download Options Menu in the Event Viewer).

From the menu, select Excel.

Procedure: Download a Suspicious File

Follow these steps to download the actual content for a suspicious file:

Select Download above the table. The Download Options Menu appears (see Figure: Download Options Menu in the Event Viewer).

From the menu, select Event Content.

You will be able to decrypt the zip archive with the password infected. Select OK to continue.

Procedure: Download Targeted Logger Information

Follow these steps to download the data collected by the Targeted Logger:

Select the data in the ANALYSIS column for the event that you wish to review. The “Event Details” Screen appears.

Select Download at the top of the screen. The Download Options Menu for this screen appears. The options are the same as for the previous procedures, with the addition of a Targeted Logger choice if Targeted Logger collections were generated. (Note that the CSV option is equivalent to the Excel option in the previous procedures.)

From the menu, select Targeted Logger. You will receive a zip archive containing the associated log files.

Learning about BluVector Dynamic Malware Analysis in the Cloud

In addition to performing high-speed static analysis of file content, BluVector provides dynamic post analysis using a cloud-based sandbox called BluVector Dynamic Malware Analysis in the Cloud. A sandbox is a specially designed environment that can mimic an operational user host system and trick malware into running as it would on its intended target. Sandboxes are carefully instrumented to capture all of the activity that a malware sample induces into the system. The sandbox then applies a set of heuristics to determine if the sample is benign or malicious.